When product and engineering teams are small and tight knit, they often share an unspoken and undocumented set of principles that guide their development. The principles arise organically through discussions, code reviews, and product development lifecycle ceremonies.

But when teams grow beyond the second Dunbar number (15), the organic development and diffusion of principles becomes difficult. Add high turnover or rapid growth in the organization and the problem is exacerbated. The absence of commonly applied principles across the organization results in a high variability of quality, availability, scalability, cost of operations and cost of development. We noticed this problem across several clients in our first two years as a company, and when we launched the first edition of The Art of Scalability we dedicated a section just to architectural principles. While we still use these principles in our practice, and while they apply to all types of implementations including microservice solutions, we never modified them to speak directly to the needs of fine-grained service architectures.

Microservices offer a great many benefits to organizations as both the organization and the architecture grows and scales. Whether you are refactoring a monolithto engender better cost of scale within your organization, building a new solution from scratch or just trying to determine if service disaggregation is for you having a set of principles to guide discussion and development will help.

The principles below are a mix of our original architecture principles (each of which still apply even if not present below), in addition to some new principles dealing with the unique needs of products comprised of services. Many of the principles also have specific design patterns to help with implementations and some have anti-patterns to avoid at all costs. The anti-patterns exist to show what types of interactions will violate the principle to the detriment of one or more non-functional requirements like availability, scalability and cost of either operation or development.

- Size Matters – Smaller isn’t always better

The prefix “micro” within microservices is an unfortunate one, as are many of the online references that a microservice should be tiny and do “only one thing”. Such advice makes absolutely no sense as “small in size” is meaningless without an understanding of the business return associated with the size. We prefer to evaluate size of a microservice along the dimensions of desired developer velocity, context, scalability, availability and cost.

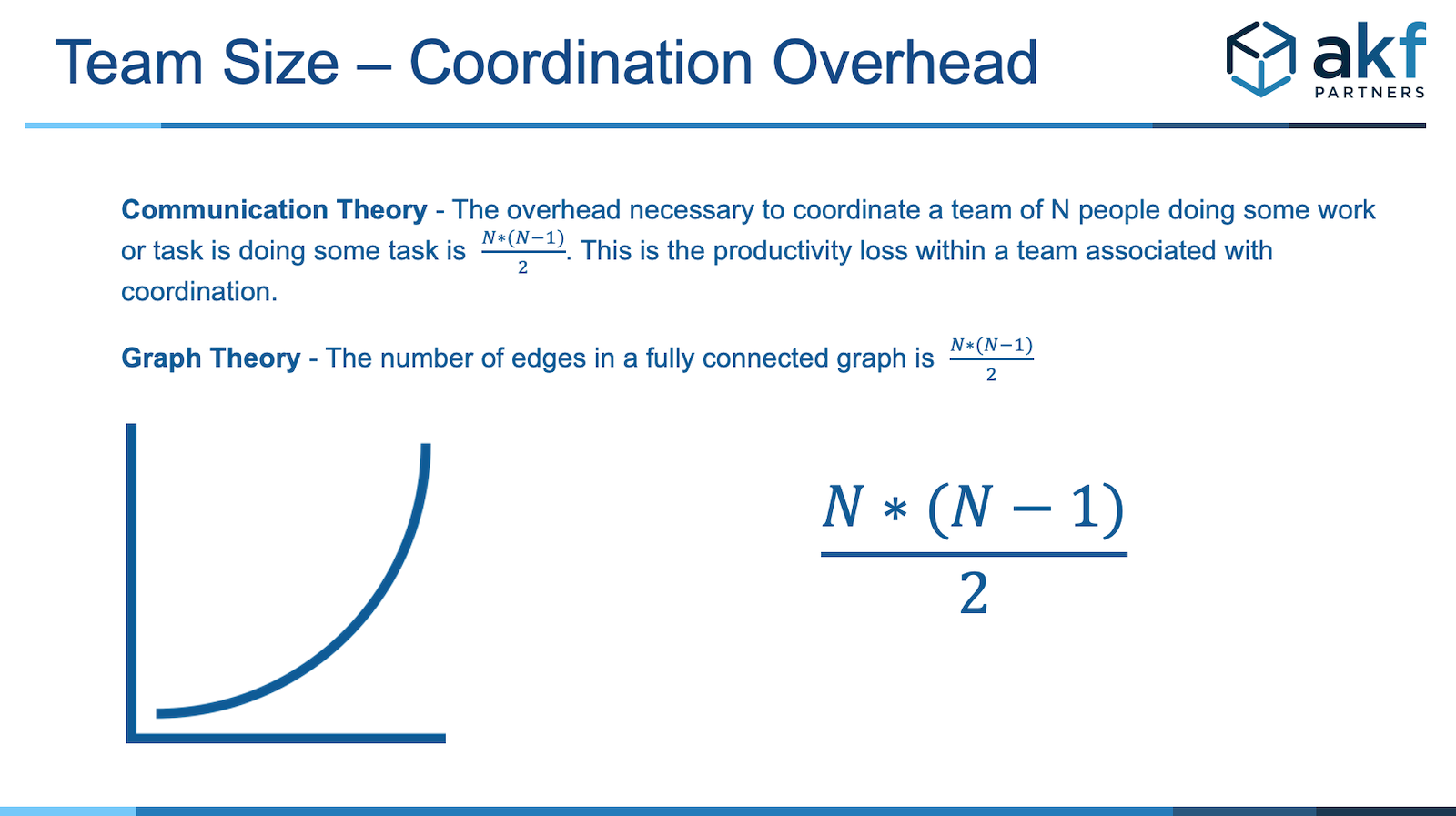

Developer Velocity: One of the most important benefits of service separation is team velocity. As teams grow, the overhead of coordination cost between team members working on a single service grows as a square of team size. As such there is great benefit in splitting the code base to engender higher velocity, but that assumes each service is owned by a single team. When a single team owns more than one service, we do not significantly increase velocity and in fact if a team owns too many services, we can decrease velocity by requiring team members to context switch between services and repositories. We often indicate that a team should own no more than 3 services and that no two teams should own the same service.

Context: Context, potentially with the help of the development of domains (as in domain driven design) is important in identifying components that can be separated. Within an enterprise resource planning (ERP) system, the notion of invoicing is clearly a separate context from payroll. While both represent information represented within the income statement, statement of cash flow and balance sheet, there is enough separation that the two can be within separate services. None of this argues that they must be in separate services, but rather that they are good candidates for separation. Always consider the customer perspective – developing from the customer back rather than the engineer forward – when defining context related boundaries for services. A corollary to this is that some things should not be split.

There are other considerations including paying for and enabling nonfunctional requirements in certain elements. Invoicing, payroll and accounts receivable services may deserve to have a larger number of instances for the services and associated databases than say reporting for instance. In these cases, it may make business sense to split them away from other services such that they can be scaled and made highly available separately. A longer discussion on the appropriate size of a service can be found in our post on service size here.

Summary- Not everything needs to be small - it doesn’t matter that it “does one thing”. Doing “one thing” isn’t highly correlated with business value and if taken to an extreme, resulting in overly small services it can destroy business value by increasing cost of development and decreasing availability.

- Services should be bounded by both context and team size.

- Consider contexts and domains from the customer back – not the engineer forward.

- One or zero databases per service

This is a simple one. In designing services, each service should have at most one database or persistence tier solution. When a service must communicate with more than one database synchronously, the probability of failure increases. Correspondingly, the availability of that service decreases as it is now tied to the availability of both database A and database B rather than just the availability of a single database. There is no rule that a service MUST have a persistence tier, hence the binary rule of "1 or 0".

Anti-Pattern: Database fan out

- No synchronously shared databases

Recall the coordination graph above. If we split services with each service assigned to a separate team but those teams and services share a common database, what velocity have we enabled? Teams must still coordinate through any modifications to the database or persistence tier. #velocityfail

Anti-Pattern: Database Fuse

- Services in breadth, never in depth

Chaining or coupling services in series (where Service A calls Service B and blocks responses to the requesting user until such time as B responds to A) increases latency and decreases availability. Each such call, if not bound locally through a sidecar implementation, must transit network devices. Each such device has an additive probability of failure in addition to the probability of failures of the two independently running services. Synchronous service to service calls should be avoided if possible.

Anti-Pattern: Services in series

Anti-pattern: Service aggregator

Anti-pattern: Service fuse

Corollary: Services for breadth, Libraries for depth

Helpful Pattern: Sidecar Pattern More information on this principle.

- Fault isolate services for maximum availability

In traditional microservice architecture language, the “bulkhead” pattern helps to contain the blast radius of a failure. We called such a concept fault isolation before microservices patterns came to pass and they are necessary in any high scale multi-tenant solution that hopes to create an appropriate level of availability. Note that to properly isolate, you must ensure that no synchronous (blocking) communication happens beyond the perimeter of the fault isolation “swimlane” or fence. This includes the notion of synchronous replication – any data transferal over a fault isolation (bulkhead,swimlane) boundary must be eventually consistent to avoid fault propagation. Additional steps can be taken to reduce the probability of failure with the introduction of circuit breakers that break synchronous communication gracefully within a fault isolation or bulkhead boundary. Below are a list of patterns to help with fault isolation, or to decrease the probability of failure within a fault isolation boundary as well as a number of anti-patterns to avoid at all cost.

Pattern: Bulkhead Pattern

Pattern: Circuit Breaker Pattern

Pattern: Saga Pattern

Anti-pattern: Service Fan Out

Anti-pattern: Cals in series

Anti-pattern: Service Fuse

Anti-pattern: Mesh

- Loose Coupling with Asynchronous Interaction

Whenever possible, AKF prefers to make communications between services non-blocking and asynchronous. This is an absolute necessity for any communications across a bulkhead or fault isolation boundary, and a nice to have when services communicate within a fault isolation or bulkhead boundary. Asynchronous communication tends to allow for greater fault resiliency. During periods of high demand, they are less likely to stack communications and overrun TCP port limitations. As such, they offer an opportunity for a slow or non-available process or resource to recover and churn through backlogged requests. In this way, for short duration failures customer objectives can be achieved without retries and the associated customer dissatisfaction. Recall from the patterns above that we desire to never have service to service communication due to negative impact to availability. That said, we understand that it is sometimes necessary. In such cases we prefer asynchronous communication for the reasons above. However, we also realize that designing and implementing asynchronous communication often comes at a higher cost as it is more difficult to both envision and design. In some cases, it may not be feasible. Below we’ve listed two patterns that may help in ensuring that services are loosely coupled and that support healthy asynchronous communication capabilities:

Pattern: Smart End Points, Dumb Pipes

Pattern: Claim Check Pattern

- Stateless Services

State costs us in multiple ways:

- Availability – state adds additional parameters and additional computational complexity when maintained on the server. For this reason alone, where state is necessary, we always try to pass the state in the call rather than maintaining it. Further, upon failure, sessions typically have to “retry” and in the extreme case force users to log back in which decreases user satisfaction.

- Cost – state takes memory and therefore adds operational cost. Large state adds more cost than small state.

- Scale – Solutions requiring state not only cost more overall, they cost more to scale and add additional limitations in theoretical maximum scale. Stateful solutions often have lower capacity overall than stateful solutions as corporate budgets are finite, limiting the investment possible to achieve appropriate scale.

- Containerized and Transportable

Containers offer a lot of value to your business. They:

- Allow you to deploy exactly what you test. Assuming that you move the container from dev to appropriate QA environments and subsequently to production you know that what you test is what you will deploy. This in turn reduces the probability that modifications between environments create new defects within your solution and help to increase overall quality.

- Allow easier interoperability regardless of service provider or geography. The solution you deploy should be able to be easily run on any infrastructure as a service provider (IaaS), within your own environments and across the geography easily. The container should have everything your solution needs to execute on similar operating systems and platforms.

- Ephemeral

If you have a pet goldfish and it gets sick, its unlikely you are going to take it to the veterinarian. More likely you will get another goldfish. Contrast this with the concept of a thoroughbred. If your prize thoroughbred turns ill, you are likely to be willing to spend significant money and time nursing it back to health. One of the drivers behind the movement to services disaggregation and microservices is to decrease our reliance on thoroughbreds. Compared to larger services, microservices should be easier to deploy, easier to start, easier to maintain, have faster time to market, and should be easier to learn for new developers. As such, microservices should be low maintenance goldfish and we should all want a majority of our architecture to be comprised of them. Microservices should be built such that upon death, you need only replace it and the replacement should be fast and easy. Because they are comparatively small, time to instantiate should be minimal. Because they have low compute and memory needs, they can run on small VMs. Adopting a principal of assuming they are short lived and will be replaced quickly, while still spending time to reduce the probability of failure gives you both high availability and rapid recovery in the event of a failure.

- Highly Available, Eventually Consistent

Our fault isolation principal above describes the need for asynchronous, non-blocking communication across fault containment/isolation/bulkhead boundaries. Loose coupling further indicates the desire for async communication even within a bulkhead boundary. Broadly speaking, teams should also ensure that when data is needed in more than one place, such data is eventually consistent in recognition of the limitation of Brewer’s CAP Theorem The distributed nature of most microservice implementations requires that data often be in more than one place to avoid anti-patterns such as two services consuming the same data store (the data fuse anti-pattern identified above). To ensure that we don’t “tie” persistence engines together, thereby limiting scale, the movement of data must be done asynchronously without real time guarantees in consistency. Relaxing the consistency constraint engenders greater scale and eliminates synchronous failures between persistence engines . Common cases of eventual consistent behavior include ensuring that search, once loaded from other data stores, isn’t updated in “real time” through multi-phase commit transactions. Similarly, reporting/analytics databases may be minutes out of sync with the primary data stores from which they are fed. The CQRS pattern is one example of how to apply eventual consistency while engendering scale without creating a database fuse.

Pattern: CQRS Pattern

- Design to be Monitored

One of the first architecture principles we identified in the Art of Scalability, and still one of the most widely ignored, is the need to think about how to monitor the efficacy of the solutions we create before we develop them. Too many teams define monitoring after a solution is created, and as a result are left attempting to monitor solutions as if they are black boxes. Are they running? Do they return a response? Further, too few teams fail to monitor critical business metrics (logins, revenue, add to cart, checkouts, tax filings, etc) in real time. As we cover here monitoring activities critical to creating customer value and alerting when their activities are significantly different than expectations is the best way to identify potential problems early. Ensure that your definition of done includes:

- Monitoring for efficacy and usage and comparing it to expected behaviors.

- Identifies critical progress points in execution and logs success or failures for statistical analysis.

- Includes all monitoring to identify and alert upon critical application failures, database response times, and critical infrastructure components including memory, load, cpu utilization, etc.

Embarking on a journey to microservices? AKF Partners has been helping companies implement products through services for well over a decade. Give us a call or shoot us an email - we can help!