In part one of this two-part series, we defined application modernization, outlined its importance and relevance to business objectives, and answered the questions CEOs most commonly ask about it. If you have questions about application modernization as a concept, please read part one and then return here for a step-by-step process for planning and building a modernization roadmap.

How to Build an Application Modernization Roadmap

One of the most important aspects of application modernization is keeping steps and objectives aligned across every stage of the effort and every team involved in it. The following steps outline how to build a successful modernization roadmap:

1. Determine Business Objectives

The first step in building an application modernization roadmap is to determine the business objectives and goals it should serve. Keeping the roadmap focused on these agreed goals helps ensure the desired outcomes are met. Common business objectives include faster time to market (TTM) and the ability to leverage new technologies that enable new features (or make them easier to implement).

2. Develop Success Metrics Tied to the Key Drivers of Your Desired Business Outcomes

It is important to agree on the metrics that will be used to measure and evaluate the application before, during, and after the modernization effort.

Outcome metrics should be mapped to business objectives. For example, TTM is influenced by many of the metrics below, but primarily by Deployment Frequency (DF) and Lead Time for Changes (LT4C). Both metrics can be measured automatically as part of a Continuous Integration and Continuous Delivery (CI/CD) platform.

Ideally, the Engineering team takes the initiative to educate the Business and Product teams so that all stakeholders understand the metrics and how they relate to end-customer and business goals. The organization will face many unforeseen challenges during an application modernization journey, and keeping the expected business benefits in view is critical to holding the team to a common goal as issues arise.

DevOps Research and Assessment (DORA) metrics contain key indicators that can help evaluate and monitor progress toward business objectives. The four top-level DORA metrics serve as proxies for the level of innovation and for customer quality:

- Deployment Frequency (DF) – how often changes are deployed to production. More frequent deployments indicate faster TTM and a more efficient innovation and learning cycle.

- Lead Time for Changes (LT4C) – the amount of time it takes for a committed change to reach production. A shorter LT4C indicates that changes flow through the delivery process efficiently and that code complexity is ‘manageable.’

- Change Failure Rate (CFR) – the percentage of total changes that are rolled back and/or cause customer issues, which indicates customer quality.

- Mean Time to Repair (MTTR) – the average amount of time it takes to recover from failures.
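To make these definitions concrete, here is a minimal Python sketch that computes all four metrics from a list of deployment records. The `Deployment` record and `dora_metrics` function are hypothetical, not part of any CI/CD product; the sketch assumes your pipeline can export, for each production deploy, the commit timestamps it shipped, whether it failed, and when service was restored. It uses means for simplicity (many teams report medians instead) and approximates repair time as the interval from the failed deploy to restoration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional

HOUR = timedelta(hours=1)


@dataclass
class Deployment:
    """One production deploy as a CI/CD platform might record it (hypothetical schema)."""
    deployed_at: datetime
    commit_times: list[datetime] = field(default_factory=list)  # commits shipped in this deploy
    failed: bool = False  # rolled back and/or caused a customer issue
    restored_at: Optional[datetime] = None  # when service was restored, if the deploy failed


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Compute the four top-level DORA metrics for the deploys in a reporting window."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deploy and a positive window")

    # Deployment Frequency: production deploys per day.
    df = len(deploys) / window_days

    # Lead Time for Changes: mean hours from commit to running in production.
    lead_times = [(d.deployed_at - c) / HOUR for d in deploys for c in d.commit_times]
    lt4c = mean(lead_times) if lead_times else 0.0

    # Change Failure Rate: fraction of deploys that failed in production.
    failures = [d for d in deploys if d.failed]
    cfr = len(failures) / len(deploys)

    # Mean Time to Repair: mean hours from a failed deploy to restored service
    # (an approximation; incident start time is often used instead).
    repairs = [(d.restored_at - d.deployed_at) / HOUR for d in failures if d.restored_at is not None]
    mttr = mean(repairs) if repairs else 0.0

    return {"DF_per_day": df, "LT4C_hours": lt4c, "CFR": cfr, "MTTR_hours": mttr}
```

For example, two deploys in a 7-day window, with lead times of 4 and 2 hours and one failure restored after an hour, yield a DF of 2/7 per day, an LT4C of 3 hours, a CFR of 50%, and an MTTR of 1 hour.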

The first two metrics most closely approximate the level of innovation and the ability of scrum teams to innovate quickly. The last two are proxies for customer quality. Ultimately, customer quality is what matters most: the rest of the quality cycle is important, but from the customer’s perspective, the quality they experience when engaging with your organization’s website and products is what counts.

3. Develop the Future State Architecture and Path to Implement

After aligning on key outcomes and metrics, it’s time to define the future state and the path to experimentation, learning, and implementation. This phase involves iterating between analyzing the existing architecture, defining the future architecture, and running experiments and proofs of concept (PoCs) to confirm that the new architecture and the path to implementing it are sound.

The analysis and design phase has three main swim lanes which can be worked in parallel:

- Analyzing the current platform

- Developing a common understanding of the product domains that will drive architecture and organization in the future (example domains: checkout, search, login, etc.)

- Exploring and developing your ‘generic’ architecture components (e.g., how services are designed and interact, and common components that will be used universally, such as Docker, Kubernetes (K8s), API gateways, etc.)

Analyzing the Current Platform

An analysis of the current platform is necessary to inform the future target architecture and subsequently to prove that it is appropriate. This involves analyzing the complexity and the dependencies that must be broken to create a distributed architecture that allows autonomous innovation at the component or domain level.

Example activities that are often part of the current platform analysis include:

- Analyzing the database(s) in depth, focusing on the most heavily used tables. For example, in an ecommerce application this may include the tables holding core item data, which is accessed both when listing or adding items to inventory and when buying.

  - This analysis informs the future data and domain structure (the groupings of functionality that will become smaller, independent applications or services, each aligned to an engineering team) and highlights the most complex and interdependent parts of the current application.

- Analyzing which parts of the code change most frequently

- Determining which parts of the application could be retained and abstracted from the new architecture

- Beginning to develop a migration strategy
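The analysis of which parts of the code change most frequently can be bootstrapped from version control history. The sketch below assumes the monolith lives in a Git repository; `parse_churn`, `file_churn`, and `churn_by_directory` are hypothetical helpers, and rolling counts up to the first two path components assumes a directory layout that roughly mirrors functional areas.

```python
import subprocess
from collections import Counter


def parse_churn(git_log_output: str) -> Counter:
    """Count how many commits touched each file.

    Expects the output of `git log --name-only --pretty=format:`, where each
    commit contributes one line per changed path and commits are separated
    by blank lines.
    """
    return Counter(line.strip() for line in git_log_output.splitlines() if line.strip())


def file_churn(repo_path: str, since: str = "12 months ago") -> Counter:
    """Run git against the repository at `repo_path` and return per-file change counts."""
    result = subprocess.run(
        ["git", "-C", repo_path, "log", f"--since={since}", "--name-only", "--pretty=format:"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_churn(result.stdout)


def churn_by_directory(churn: Counter, depth: int = 2) -> Counter:
    """Roll per-file counts up to the first `depth` path components (e.g. 'src/checkout')."""
    rolled: Counter = Counter()
    for path, count in churn.items():
        rolled["/".join(path.split("/")[:depth])] += count
    return rolled
```

Calling `churn_by_directory(file_churn("/path/to/monolith")).most_common(10)` (the path is a placeholder) lists the ten most frequently changed areas, which can then be cross-referenced with the database analysis.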

Developing a Common Understanding of the Future Domains

Determining the future domains is a critical and often underestimated step. It can be done through event storming and domain-driven design (DDD). In this context, domains are the areas of functionality along which a larger application is split into smaller, distributed components. They are the foundation that determines the number and type of services each scrum team will own as the monolith is decomposed. Domain-driven design is an effective approach because it eases communication and builds a common understanding of the architecture, and it supports flexible, efficient discussion of how to group pieces of functionality and how to align the future distributed architecture with autonomous scrum teams. Event storming is a fast, collaborative method for modeling domains as a group, and it is used with domain-driven design to develop a shared team understanding of the domains.

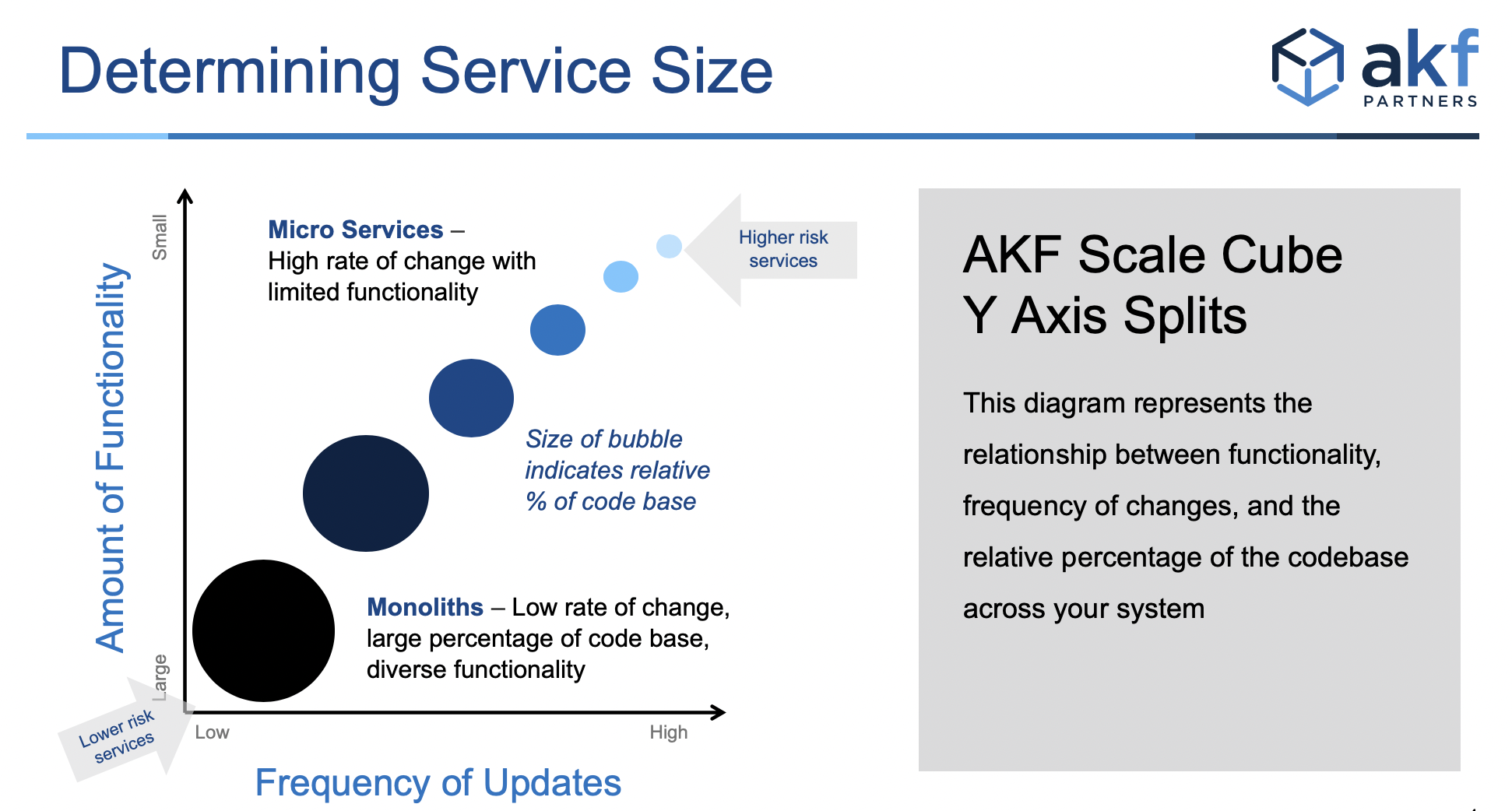

These domains (or services) are generally aligned with the y-axis of the AKF scale cube, which splits an application by function, service, or resource. It is important that a given service is owned by only one team. A team can be responsible for multiple services, but avoid going too granular; the best balance is usually for each team to be solely responsible for one or a few services. Once the future domains are established and teams agree on who owns which service, it is time to move to the next step. Note that this team alignment process may take months.
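The single-owner rule is easy to check mechanically once the team-to-service mapping is written down. This is a minimal sketch assuming a hypothetical mapping of team names to the services they own, plus a catalogue of all services identified during event storming; the `max_services` threshold is an illustrative assumption, not a recommended number.

```python
from collections import defaultdict


def find_shared_services(ownership: dict[str, list[str]]) -> dict[str, list[str]]:
    """Services claimed by more than one team, mapped to every team that claims them."""
    owners: dict[str, list[str]] = defaultdict(list)
    for team, services in ownership.items():
        for service in services:
            owners[service].append(team)
    return {svc: teams for svc, teams in owners.items() if len(teams) > 1}


def find_unowned(ownership: dict[str, list[str]], catalogue: set[str]) -> set[str]:
    """Services in the domain catalogue that no team has claimed yet."""
    owned = {svc for services in ownership.values() for svc in services}
    return catalogue - owned


def find_overloaded_teams(ownership: dict[str, list[str]], max_services: int = 3) -> list[str]:
    """Teams owning more than `max_services` services (an illustrative threshold)."""
    return sorted(team for team, services in ownership.items() if len(services) > max_services)
```

Alignment is reached, in this simplified view, when all three checks come back empty.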

Developing the “Generic” Future Target Architecture

This phase involves developing the generic building blocks that the teams will use, as well as the design patterns and standards for how the components of the future state architecture will work together.

Following is a (non-exhaustive) list of common activities and decisions that are part of this phase:

- Developing architectural principles that cascade into standards and down to the implementation level (e.g., service design standards, coding standards).

- Selecting specific components such as programming languages and databases for various use cases. In scaled organizations, teams may be able to choose between 2-3 options for a given use case, but the level of standardization should be appropriate for the size of the team.

- Determining a build-vs.-buy philosophy and applying it to foundational components. For example, an API gateway is normally an off-the-shelf component that is selected once and used across the new architecture.

In general, this phase involves defining all the components and approaches that will be wholly or partially shared across the individual scrum teams: everything from programming languages to CI/CD tools and automation, through to logging, monitoring, and managing the application in production.

4. Iteration/Negotiation Phase

This phase is iterative: insights gained from analyzing the existing architecture guide the development of the future architecture. The iterations also flow top-down and bottom-up within the organization to set the initial pace of progress, the engineering capacity allocated, and macro-level priorities.

In practice, once the top-level analysis is done, the leadership team should review which teams own which parts of the monolith (specifically, which teams primarily change and manage which business logic). After parts of the monolith are ‘assigned’ to specific teams, the scrum teams work out their proposed separation approach and pace locally. Of course, if a team comes back and says, “we will do this over the next 15 years,” the negotiation begins. Ideally, the leadership team puts a stake in the ground for teams to work toward, and the iterations begin as teams return with proposals that are shorter or longer. For example, senior management would typically set a high-level target such as “separate 30% of functionality out of the monolith in Year 1, as defined by XYZ measurements.”

In this iteration phase, it is also important to consider dependencies. For example, some work may be too risky to start until other parts of the monolith are separated. These dependencies should be identified wherever possible during the monolith analysis in the preceding phase.

Following are key dimensions that should be considered in prioritizing and pacing modernization work, especially at the beginning of the effort:

- Business Impact – the whole purpose of modernization is to benefit the business, usually through a faster pace of innovation, BUT in the early phases, business benefit must be weighed heavily against the risk of customer-impacting incidents until enough has been learned about the challenges of separating the code base and the new architecture’s building blocks have been proven out.

- Risk – as described above, you generally want to start by separating out lower-risk parts of the existing application. Lower risk means parts of the application that are less heavily used, less complex within the monolith, or both. Once lower-risk areas are successfully separated, the learnings can be applied to more complex parts of the legacy code.

- Opportunity for Learning – especially in the early phases of a modernization effort, it is important to weigh what can be learned by separating different portions. Ideally, the first components you prioritize help you understand complexity and dependencies in more detail, with minimal risk to customers, as the new architecture evolves. Consider learning both about the foundational ‘generic’ parts of the architecture (e.g., CI/CD pipeline, API gateway, event-driven architecture) and about the complexities and dependencies of breaking down the existing monolith itself.
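One way to make these trade-offs explicit when pacing the work is a simple weighted score per candidate component. The sketch below is purely illustrative: the 1-to-5 ratings, the `Candidate` fields, and the weights for the hypothetical "early" and "later" phases are assumptions meant to show how risk and learning can outweigh business impact at the start, with the balance shifting later.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A part of the monolith being considered for separation (hypothetical ratings, 1 = low, 5 = high)."""
    name: str
    business_impact: int
    risk: int      # usage volume and entanglement within the monolith
    learning: int  # what separating it would teach about the monolith and the new platform


# Illustrative weights only: early on, low risk and learning dominate; later, impact does.
PHASE_WEIGHTS = {
    "early": {"impact": 0.2, "risk": 0.4, "learning": 0.4},
    "later": {"impact": 0.6, "risk": 0.2, "learning": 0.2},
}


def score(c: Candidate, phase: str) -> float:
    w = PHASE_WEIGHTS[phase]
    # Risk counts against a candidate, so invert it onto the same 1..5 scale.
    return w["impact"] * c.business_impact + w["risk"] * (6 - c.risk) + w["learning"] * c.learning


def prioritize(candidates: list[Candidate], phase: str) -> list[str]:
    """Candidate names ordered from highest to lowest score for the given phase."""
    return [c.name for c in sorted(candidates, key=lambda c: score(c, phase), reverse=True)]
```

With these weights, a lightly used, loosely coupled component ranks first early on, while a high-impact, high-risk component such as checkout rises to the top in later phases. The scores are a conversation aid for the negotiation below, not a substitute for it.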

As previously outlined, negotiation between the macro-organization level and the team level is iterative. This phase should be clearly timeboxed; otherwise it can drag on and sap momentum. Senior management should give clear direction on high-level targets and scope for each team but remain flexible as new information arises (about complexity, new issues, dependencies, etc.). The deadline for this planning, pacing, and negotiation phase is often set by corporate-level planning cycles.

5. Execution/Monitoring/Adjusting Phase

The final stage of the modernization process is executing the roadmap, monitoring progress, and adjusting based on feedback. Key steps in this stage include:

- Tracking Progress – determine how the overall modernization effort and individual teams are progressing, and adjust as necessary. Dimensions of tracking progress include:

  - Monitoring production traffic to both the monolith and the new code base as traffic is shifted to the new services and scaled down on the monolith.

  - Monitoring the monolith to ensure new tables are not being added. New tables in the legacy monolith usually indicate that new features are being built in the monolith.

    - Tables should be added to the monolith only to stage data for migration to the new datastore(s), or as an exception that is reviewed and approved as a deliberate ‘conscious decision’ (for TTM-critical functionality).

    - Continuing to add to the monolith or legacy code base creates further technical debt and should not be approved lightly once modernization is underway.

- Monitoring Engineering Capacity (e.g., time, story points) applied to modernization. As with most execution-phase activities, this matters even more in the early phases, when maintaining momentum is critical to keeping support for the initiative. Engineering teams often allocate an appropriate level of capacity to modernization in their planning, but the moment a new feature need is identified, modernization is the first initiative to be deprioritized.

- Establishing, Monitoring, and Enforcing Architecture Guidelines that teams can follow to ensure best practices are applied consistently as the new architecture is implemented.

- Reporting Against Benefit/Outcome Metrics to all key stakeholders, including the Business, and celebrating progress VERY visibly and vocally. Especially in the early phases of a concerted modernization effort, celebrating progress is critical to maintaining momentum. If Engineering, Product, or Business stakeholders don’t understand the progress and benefits being realized, the whole effort is at risk. Once momentum is established, this risk is reduced.
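Several of these tracking signals reduce to simple calculations. The sketch below assumes you can obtain request counts for the monolith and the new services (e.g., from gateway or load-balancer logs), snapshots of the monolith’s table names (e.g., from a query against `information_schema.tables`), and story points by category; all function names are hypothetical.

```python
def traffic_share_new(monolith_requests: int, service_requests: int) -> float:
    """Fraction of production requests served by the new services instead of the monolith."""
    total = monolith_requests + service_requests
    return service_requests / total if total else 0.0


def unapproved_new_tables(baseline: set[str], current: set[str], approved: set[str]) -> set[str]:
    """Tables added to the monolith since the baseline snapshot without an approved exception.

    `approved` holds staging tables for migration and reviewed 'conscious decision' exceptions.
    """
    return (current - baseline) - approved


def modernization_capacity_share(modernization_points: float, total_points: float) -> float:
    """Share of delivered story points spent on modernization in a planning period."""
    return modernization_points / total_points if total_points else 0.0
```

Tracked per sprint, a rising traffic share, an empty set of unapproved tables, and a stable capacity share are the kinds of trends worth reporting to stakeholders.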

We routinely work with companies undergoing application modernization efforts and can provide tailored guidance at any stage of the process. If you need assistance, please contact us; we can help!