Time it takes to boil an egg: 720,000 milliseconds

Average time in line at the supermarket: 240,000 milliseconds

Time it takes to brush your teeth: 120,000 milliseconds

Time it takes to make a sandwich: 90,000 milliseconds

In our everyday lives we aren’t used to measuring things in milliseconds. In the software world our users’ expectations are different. Milliseconds matter. A lot.

The average person may wait 240,000 milliseconds to check out at the grocery store but is far less likely to wait that long to check out on an e-commerce site.

What Is Latency

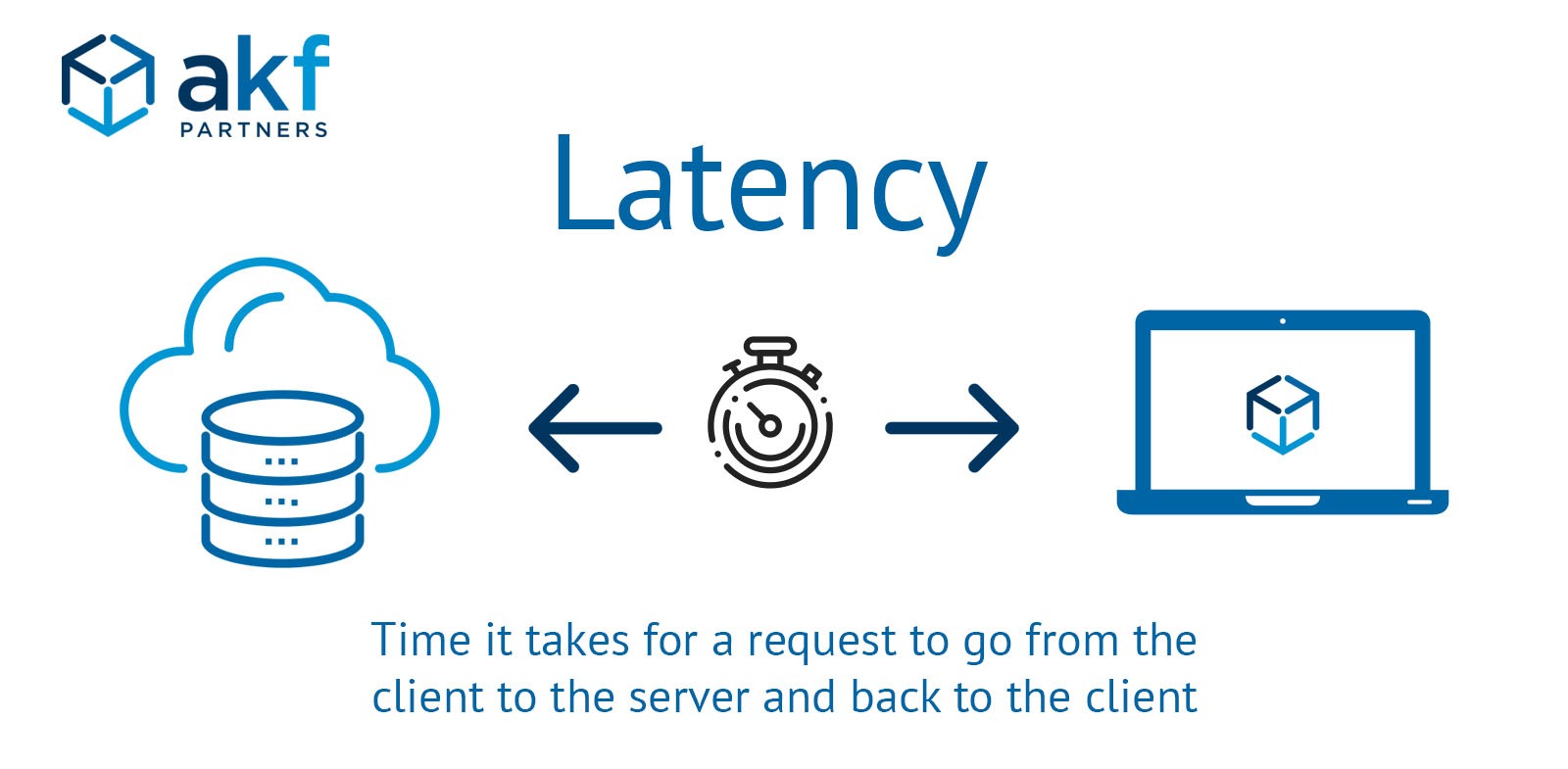

Latency is how long it takes to get an answer back after making a request to the server.

It’s how long it takes for a request to go from the browser to the server and back to the browser.
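To make the definition concrete, here is a minimal sketch of timing a single round trip. The `fake_request` function is a hypothetical stand-in for a real browser-to-server call (it just sleeps for 50ms), and `timed_ms` is a helper name I made up for illustration.

```python
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def timed_ms(request: Callable[[], T]) -> Tuple[T, float]:
    """Run request() and return (result, elapsed time in milliseconds)."""
    start = time.perf_counter()
    result = request()
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


def fake_request() -> str:
    # Hypothetical stand-in for a real round trip to a server.
    time.sleep(0.05)
    return "ok"


result, elapsed_ms = timed_ms(fake_request)
print(f"got {result!r} after {elapsed_ms:.0f} ms")
```

In a real measurement you would swap `fake_request` for an actual HTTP call and collect many samples rather than one.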

Latency vs Bandwidth

I often see the words latency and bandwidth used together – or even interchangeably – but they have two very different meanings.

Using the metaphor of a restaurant, bandwidth is the amount of seating available. The more seating the restaurant has, the more people it can serve at one time. If a restaurant wants to be able to serve more people in a certain time period they add more seating. Similarly, bandwidth is the maximum amount of data that can be transferred in a specific measure of time.

If bandwidth is the maximum number of diners that can fit in a restaurant at one time, then latency is the amount of time it takes for food to arrive after ordering. On the Internet, latency is a measure of how long it takes for a user to get a response from an action like a click. It is the “performance lag” the user feels while using our product.

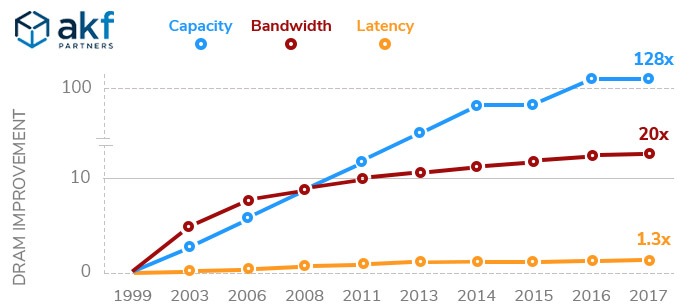

Luckily, over the past 20 years, the bandwidth and capacity of memory have increased dramatically. Unfortunately, latency has barely improved by comparison over the same 20 years.

Latency is directly linked to the “experience” the end user has with our products or services. If our latency isn’t minimized then we are leaving money on the table!

- Amazon did a study that found for every 100ms of latency it cost them 1% in sales.

- Google discovered that for every 500ms they took to show search results, traffic dropped 20%.

Even more shocking is a study done by the TABB Group. The study estimated the outcome of a broker’s electronic trading platform being just 5 milliseconds behind the competition. According to their estimate, this 5 millisecond delay could cost $4 million in revenue per millisecond. Their study also concluded that if an electronic broker is 100 milliseconds behind the competition they might as well shut down and become a floor broker.

100ms can be the difference between strategic advantage and second or third place.

100ms Rule of Latency – Paul Buchheit (Gmail Creator)

How fast is 100ms? Paul Buchheit coined the 100ms Rule. The rule states that every interaction should be faster than 100ms. Why? 100ms is the threshold “where interactions feel instantaneous.”

What Causes Latency

Finding the cause of all of our latency isn’t always an easy task. There are a lot of possible causes. For the most part we can borrow the Pareto Principle (80/20 Rule) and knock out the usual suspects.

Propagation

Propagation is how long it takes information to travel. In a perfect world our request travels at the speed of light. Also in a perfect world milkshakes would be good for us. For various reasons, our packet won’t travel at the speed of light.

Even if it did travel at the speed of light, the distance between our server and our web user still matters.

Packets traveling from one side of the world to the other and back would add about 250ms of latency. Unfortunately our data doesn’t travel “as the crow flies.” The paths rarely travel in a straight line (especially if using a VPN). This adds a lot more distance for the request to travel.

Transmission Mediums

Remember when I said it wasn’t a perfect world? This is what I was talking about. The material data cables are made of affects the speed of propagation. Different materials impose different limits on that speed.

For example, light can travel from New York to San Francisco in about 14ms (in a vacuum). Inside a fiber cable it takes about 21ms.
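The arithmetic behind those two figures can be sketched as follows. The distance (about 4,130 km in a straight line) and the fiber refractive index (about 1.5) are my assumptions, not numbers from the original figures, so treat the output as a rough check rather than a measurement.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
NY_TO_SF_KM = 4_130                # assumed straight-line distance
FIBER_REFRACTIVE_INDEX = 1.5       # assumed typical value for glass fiber


def one_way_delay_ms(distance_km: float, refractive_index: float = 1.0) -> float:
    """Propagation delay in ms; light slows down by the refractive index."""
    speed_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    return distance_km / speed_km_s * 1000.0


vacuum_ms = one_way_delay_ms(NY_TO_SF_KM)
fiber_ms = one_way_delay_ms(NY_TO_SF_KM, FIBER_REFRACTIVE_INDEX)
print(f"vacuum: {vacuum_ms:.1f} ms, fiber: {fiber_ms:.1f} ms")
```

Note that these are one-way delays along a perfectly straight path; a real round trip doubles them and follows a longer route.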

For the most part, data travels fast across long distances. The cabling used over longer distances is usually faster. The last mile is usually the slowest. One reason for this is that buildings, homes, and commercial areas tend to use existing wiring like coaxial or copper cable. Another reason is explained in the next section: your data changes hands as it gets back to you (for example, at your router).

Consider yourself lucky if you have fiber installed. Copper and coaxial cables are slower. 4G can add up to 100ms to the latency. We won’t even talk about satellite.

Network Hops

It would be great if our data went straight from our device to the server and back, but again, probably not going to happen. As our packet travels to the server and back to the source it travels through different network devices. The request passes through routers, bridges, and gateways. Each time our data is handed off to the next device, a “network hop” occurs.

These hops add more latency than distance. A request that travels 100 miles but makes 5 hops will have more latency than a request that travels 2500 miles with only 2 hops.

The more hops in the path, the more latency.

How To Lower Latency?

Latency can best be described as the sum of the previously mentioned causes and a lot more. There is no magical button we can push to achieve ultra low latency. There are, however, a few things that can make a big dent. This is by no means an exhaustive list.

Asynchronous Development Approach

Multitasking as a developer is a bad idea. Making software multitask is a great idea. Whenever possible, make calls asynchronous (so multiple calls can be in flight at the same time). This can make a huge difference in latency, and in perceived latency, which can be just as important (more on that shortly).
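Here is a minimal sketch of the difference, using Python's `asyncio`. The three service names and their 100ms delays are made up for illustration, and `asyncio.sleep` stands in for real network calls.

```python
import asyncio
import time


async def call_service(name: str, delay_s: float) -> str:
    # Hypothetical network call; the sleep simulates waiting on the server.
    await asyncio.sleep(delay_s)
    return name


async def sequential(delays: dict) -> list:
    # Each call waits for the previous one to finish.
    return [await call_service(name, d) for name, d in delays.items()]


async def concurrent(delays: dict) -> list:
    # All calls are in flight at once; total time is roughly the slowest call.
    return list(await asyncio.gather(
        *(call_service(name, d) for name, d in delays.items())
    ))


def run_timed(coro_fn, delays: dict):
    start = time.perf_counter()
    results = asyncio.run(coro_fn(delays))
    return results, (time.perf_counter() - start) * 1000.0


DELAYS = {"profile": 0.10, "cart": 0.10, "recommendations": 0.10}
seq_results, seq_ms = run_timed(sequential, DELAYS)
con_results, con_ms = run_timed(concurrent, DELAYS)
print(f"sequential: {seq_ms:.0f} ms, concurrent: {con_ms:.0f} ms")
```

Both versions return the same results in the same order. The sequential version pays for every call in turn, while the concurrent one pays roughly for the slowest call only.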

Make Fewer External Requests

If we know that every trip to and from the server adds latency, then let’s make the trip less often. There are many ways we can do this. Here are a few:

- Use image sprites

- Eliminate images that don’t contribute to overall product

- Use inline svg code instead of images for icons and logos

- Combine and minify all HTML, CSS, and JS files

There are times we need to reference files with an external HTTP request. If we don’t control those resources then there is little we can do. We can, however, evaluate and reduce the number of external services we use.

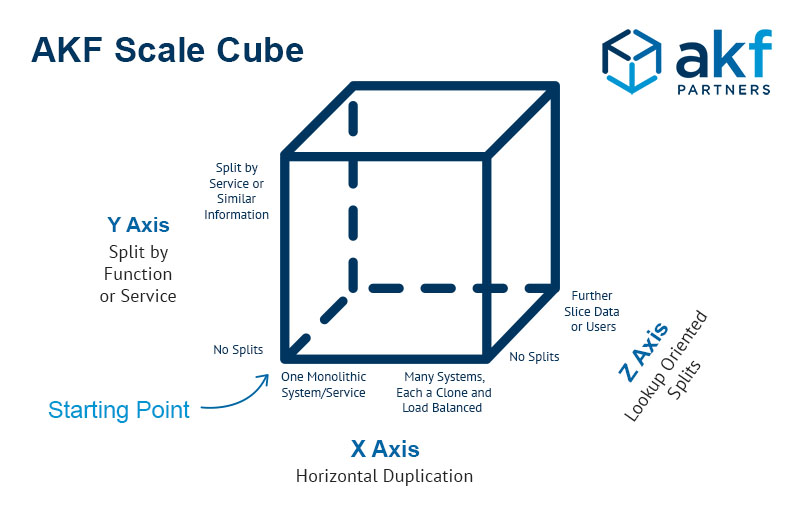

Z Axis split geographically

One of the things AKF Partners is known for is the Scalability Cube. We have helped hundreds of clients scale along all three axes.

If it makes sense for our architecture, splitting along the Z Axis by geography can make a huge difference in latency. Separating the data based on geographical location hopefully places servers closer to the end user, thus shortening the round trip. If you would like an evaluation to see if this is something that could be achieved with your current architecture, don’t hesitate to contact us.

Use a CDN

A CDN is a content delivery network. Basically it is a system of distributed servers. These servers deliver pages or other content to end users based on their geographic location. Remember shorter distances mean lower latency.

A CDN can have a huge impact on latency. I stole a few figures from the KeyCDN website to show you how big of a difference. Test site was located in Dallas, TX.

| Server Location | No CDN (ms) | With CDN (ms) | Difference % |
|---|---|---|---|
| New York | 36.908 | 18.069 | -50.97% |
| San Francisco | 39.645 | 18.900 | -52.33% |
| Frankfurt | 123.072 | 3.734 | -96.97% |
| London | 127.555 | 4.548 | -96.43% |
| Tokyo | 130.804 | 3.379 | -97.42% |

A content delivery network can have a major impact in reducing latency.

Caching

The details of how caching works can be complicated but the basic idea is simple. If I were to ask you what the result of 8 x 7 is, you would know right away the answer is 56. You didn’t have to think about it. You didn’t calculate it in your head. You’ve done this multiplication so many times in your life that you don’t need to. You just remember the answer. That is kind of how caching works.

If we are set up to cache a page on the server, the first time someone visits our page it loads normally: the request is received by the server, processed, and sent back to the client as an HTML file. Because caching is enabled, that HTML file is also saved, usually in RAM (which is fast). The next time someone makes the same request, the server doesn’t need to process anything. It simply serves the HTML page from the cache.
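A minimal sketch of that flow, assuming an in-memory dictionary as the cache. `PageCache` and `render_page` are hypothetical names for illustration; a real setup would more likely rely on the web server, a reverse proxy, or a dedicated cache.

```python
import time
from typing import Callable, Dict, List, Tuple


class PageCache:
    """Keep rendered HTML in memory and reuse it until it expires."""

    def __init__(self, render: Callable[[str], str],
                 ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._render = render
        self._ttl = ttl_seconds
        self._clock = clock
        self._pages: Dict[str, Tuple[float, str]] = {}  # path -> (expiry, html)

    def get(self, path: str) -> str:
        now = self._clock()
        entry = self._pages.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]                  # cache hit: no rendering needed
        html = self._render(path)            # cache miss: do the real work
        self._pages[path] = (now + self._ttl, html)
        return html


render_calls: List[str] = []


def render_page(path: str) -> str:
    # Hypothetical stand-in for the expensive server-side processing.
    render_calls.append(path)
    return f"<html><body>{path}</body></html>"


cache = PageCache(render_page)
first = cache.get("/home")   # rendered and stored
second = cache.get("/home")  # served straight from the cache
print(len(render_calls))
```

The second request never reaches `render_page`, which is the whole point: the server hands back the stored HTML instead of processing the request again.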

We can also cache at the browser level. The first time a user visits a site, the browser will receive a bunch of assets and (if set up correctly) will cache those assets. The next time the site is visited, the browser will serve the cached assets and it will load a lot quicker.

We decide when these caches expire. Be aggressive with caching of static resources. Set the expiration date for a minimum of a month in advance. I recommend setting them for 12 months if it makes sense for that resource.
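One common way to set that expiration is the HTTP `Cache-Control` response header, where `max-age` is given in seconds. The sketch below only builds the header value; the `cache_control` helper is a hypothetical name, and treating a month as 30 days and a year as 365 days is my simplification.

```python
from datetime import timedelta

ONE_MONTH = timedelta(days=30)   # assumed: a "month" is 30 days
ONE_YEAR = timedelta(days=365)   # assumed: 12 months is 365 days


def cache_control(max_age: timedelta) -> str:
    """Build a Cache-Control value that lets browsers and shared caches keep an asset."""
    return f"public, max-age={int(max_age.total_seconds())}"


print(cache_control(ONE_MONTH))
print(cache_control(ONE_YEAR))
```

With lifetimes this long, it helps to put a version or content hash in the asset's filename so that a changed file gets a new URL instead of waiting out the old cache entry.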

Render Content on the Server Side

Rendering templates on the server (rather than dynamically on the client) can also help lower latency. Remember every trip to the database results in higher total latency. Why not pre-render the pages on the server and load the static pages on the client?

This technique doesn’t work for all applications – but content publishing sites like The Washington Post or Medium can benefit greatly from pre-rendering their content on the server side and posting static rendered content on the client.

Use Pre-fetching Methods

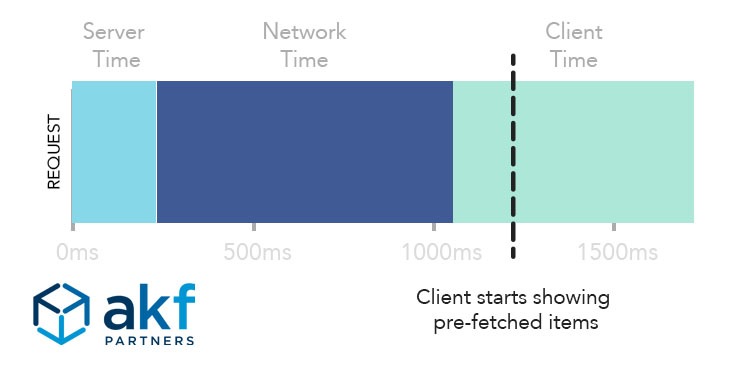

I almost didn’t include this tip. Technically this doesn’t lower latency at all. What it does do is lower the “perceived user latency” felt by customers.

Perceived user latency is how long the wait seems to the user.

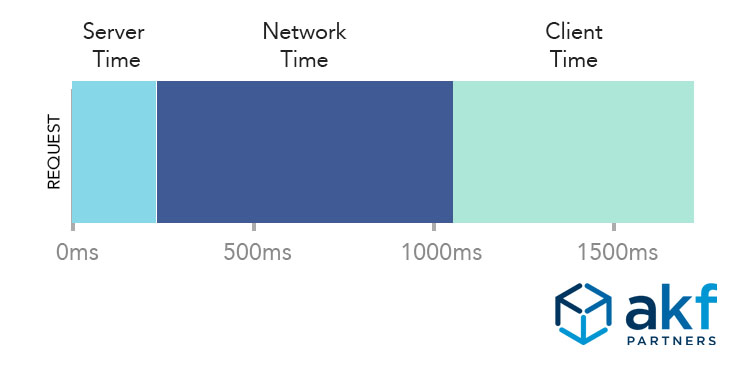

A normal request looks like the graphic below. First is the time it takes the server to process the request. After that is the time it takes the network to get the response back to the client. Next is the time it takes the client to process the response and load the page.

If we pre-fetch certain items or show placeholder images while the response from the server is loading (a la Facebook) then the perceived latency felt by the user is lower. The actual latency is exactly the same but the user “feels” like it loaded faster.
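As a rough sketch of the pre-fetching idea, the simulation below starts fetching the next page while the user is still reading the current one. The 100ms fetch time and 150ms reading time are made-up numbers, and `fetch_page` is a hypothetical stand-in for a real request.

```python
import asyncio
import time


async def fetch_page(path: str, delay_s: float = 0.10) -> str:
    # Simulated server and network time; the actual latency never changes.
    await asyncio.sleep(delay_s)
    return f"content of {path}"


async def view_next_page(prefetch: bool):
    """Return (content, how long the user waited after clicking, in ms)."""
    task = None
    if prefetch:
        # Kick off the request before the user asks for it.
        task = asyncio.create_task(fetch_page("/next"))

    await asyncio.sleep(0.15)        # the user is busy reading the current page
    clicked = time.perf_counter()    # the user clicks "next"

    if task is not None:
        content = await task         # usually already finished
    else:
        content = await fetch_page("/next")

    waited_ms = (time.perf_counter() - clicked) * 1000.0
    return content, waited_ms


plain_content, plain_ms = asyncio.run(view_next_page(prefetch=False))
pre_content, pre_ms = asyncio.run(view_next_page(prefetch=True))
print(f"without prefetch: {plain_ms:.0f} ms, with prefetch: {pre_ms:.0f} ms")
```

The fetch takes the same 100ms either way. The only thing that changes is how much of it the user sits through after clicking.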

We can get the same benefits of a lower latency by “gaming” latency this way.

Latency: Summary

Latency is a metric we should all be tracking. Providing a great user experience with low latency makes a difference. It keeps our customers on our applications and sites longer. It fosters retention. Most importantly it will increase conversion rate.

If you aren’t currently tracking your latency as a metric, take that first step and see where you stand. If you need help, let us know and we’d be happy to schedule a call.