We are commonly asked questions similar to this:

“My company has doubled the size of the development team over the last couple of years, yet the development team seems to get slower as we get larger. Why does my velocity decrease as I increase the size of my team?”

Causes of Declining Velocity over Time

First, it’s important to understand that velocity is inversely correlated with time even when we keep team size constant. Put another way, given constant effort of a consistent team size to an application over time, velocity will decrease absent other intervening efforts. Why is this? And what intervening efforts can help offset this decrease in velocity? Let’s discuss the largest factors decreasing velocity first.

- Technology: Cyclomatic Complexity

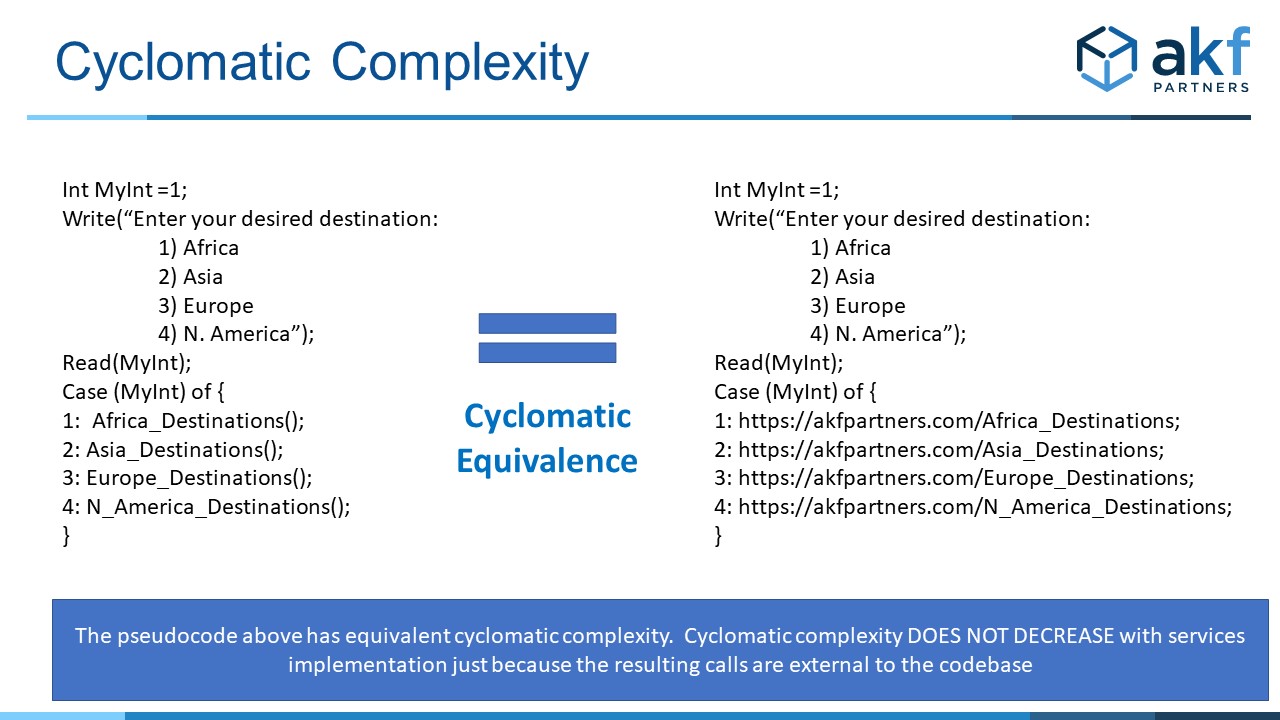

Let’s take the simplest and smallest of team sizes – a team of a single individual named Mary. As Mary works on an application over time, it grows both in size and more importantly cyclomatic complexity. Cyclomatic complexity is one measure of software complexity that evaluates the number of independent paths through a code base. As the cyclomatic complexity increases, Mary finds it harder and more difficult to add new functionality. As a result, as a code base increases in size so does the likely cyclomatic complexity. As the cyclomatic complexity increases, the difficulty of adding new functionality also increases. As the difficulty increases, velocity (throughput) decreases.

- Organization: Team Size

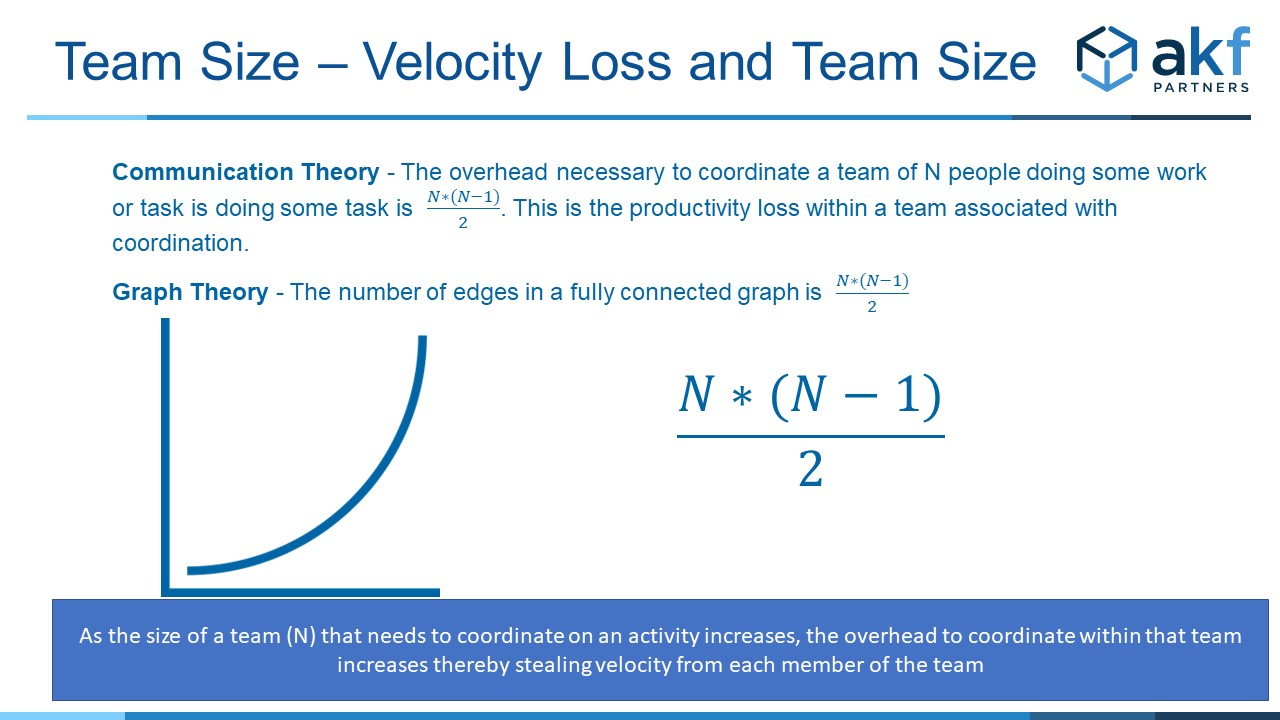

The size of a team working on a single codebase (keep single codebase in mind) negatively impacts the velocity of each member within the team. This doesn’t mean that the overall team velocity decreases, but rather that each person becomes slightly less productive with each new addition to the team. If a single person is on a team and their baseline productivity is 1.0, adding a new person to the team might make each of the two people on the team’s productivity .99 for a combined productivity of 1.98. The team overall is more productive, but individual productivity has reduced.

This loss in productivity is a very well researched phenomenon caused by the need to coordinate between individuals. It isn’t just a software phenomenon; it exists in every team of any size that must coordinate and cooperate to achieve an outcome. First described within software by Mel Conway in his paper “How do committees invent” , and leveraging concepts from both graph theory and communication theory, the decline in a team of size N is asymptotically a square of the team size (N2) and is represented by the following equation:

- Process: Process Bottlenecks

Commonly, as teams grow, they also outgrow processes put in place to aid quality, scale and sometimes even time to market. Smaller teams (of less than 12 people) commonly funnel major architectural decisions through a single person to ensure consistency with standards. As a team grows, say from 12 people to 24, the burden on that lead engineer or CTO becomes larger. At 48 people, the CTO is likely to become a bottleneck, artificially depressing organizational velocity due to stalls in the development pipeline looking for approval.

The common fix here is to assemble a team of architects with shared standards to aid with such reviews. But even such a monolithic team review process can cause small stalls in a pipeline waiting for meetings to be scheduled or waiting for the availability of a single architect to review an initiative. As is the case with the scalability and response time of a monolithic single point of failure within an architecture that constrains growth or increases response time, so too is it the case of a monolithic organization or person constraining throughput. Other examples of process bottlenecks include shared CI/CD pipelines, shared and constrained build and test environments and other shared process components that can become contentious and create throughput pipeline stall events.

Fixes to Declining Velocity

- Technology: Develop Services but BE CAREFUL:

The fix for cyclomatic complexity and its associated impact on decreasing velocity is to develop services instead of monoliths. But monolith decomposition (or alternatively for new development the development of new microservices) alone does not guarantee a reduction in cyclomatic complexity. When services rely on other services through restful calls, remote method invocation, remote procedure calls or even through pub/sub in through an asynchronous bus, cyclomatic complexity does NOT go down. Put another way, when a decision to branch is made, the resulting fork increases cyclomatic complexity whether that call is within the same codebase or made to another service.

To reduce cyclomatic complexity, we must ensure that services do not rely on each other – that one service does not “call” or rely on another service. Doing so increases the cognitive load on the engineer – in essence tying the codebases together which in turn makes changes more difficult. When changes are more difficult, velocity decreases as each change becomes slower to implement. As such, for greatest velocity, whether with a single engineer or a very large team, services should be developed to start and complete an operation without needing other services for interaction.

Ensuring that services aren’t tightly coupled and can perform a function without needing other services does more than just increase development velocity, it also increases the availability and scalability of a product. For more information on these benefits, peruse our articles on patterns and anti-patterns within microservices.

- Team Size and Construction

As we indicated earlier, as a team coordinating in a single code base increases in size, so does the velocity of individual engineers decrease. This decrease is a result of the intragroup coordination necessary to develop, build, QA and release the software and associated data structures.

To help offset this reduction in velocity, we must implement two principles:

- Constrain team sizes to no more than 15 people

Mel Conway recommended no more than 8 people to a team. Robin Dunbar’s research into team sizes indicates that 15 is an absolute upper bound for the size of any team that needs to coordinate on an initiative. But constraining team size alone won’t get you to maximum velocity, you also need to ensure that the architecture and org structure allows for autonomous teams with low coordination. An interesting side note is that Amazon’s famous 2 pizza teams aren’t an Amazon creation, but rather an implementation of Mel Conway and Fred Brooks’ (of Mythical Man Month fame) advice.

- Engender Ownership and Limit Necessary Coordination

Given that team coordination is one of the primary drivers of velocity loss within teams, we must limit the coordination activities between teams. This recommendation requires a mix of architecture and organization structure.

Our architectural implementation must limit interactions between services owned by different teams and must limit shared use of common database schemas between teams. Each synchronous interaction increases the coordination necessary between teams and therefore decreases potential velocity. As interactions or coupling increases, so too does the need for changes to those interactions over time. Further, shared data entities also require coordination for schema changes, changes to contextual meaning, etc.

Abiding by these architectural principles for microservices helps to reduce this interaction and therefore maximize velocity within teams.

- Constrain team sizes to no more than 15 people

- Eliminate Process Bottlenecks

No process we’ve ever met meets the needs of every company. As teams grow, processes can either reduce or enable velocity. Processes that force development teams through toll gates implemented by a single person or monolithic organization (e.g. an architecture team conducting architecture review boards) can add delays to the delivery of features as those features wait to be reviewed for hours, days or weeks.

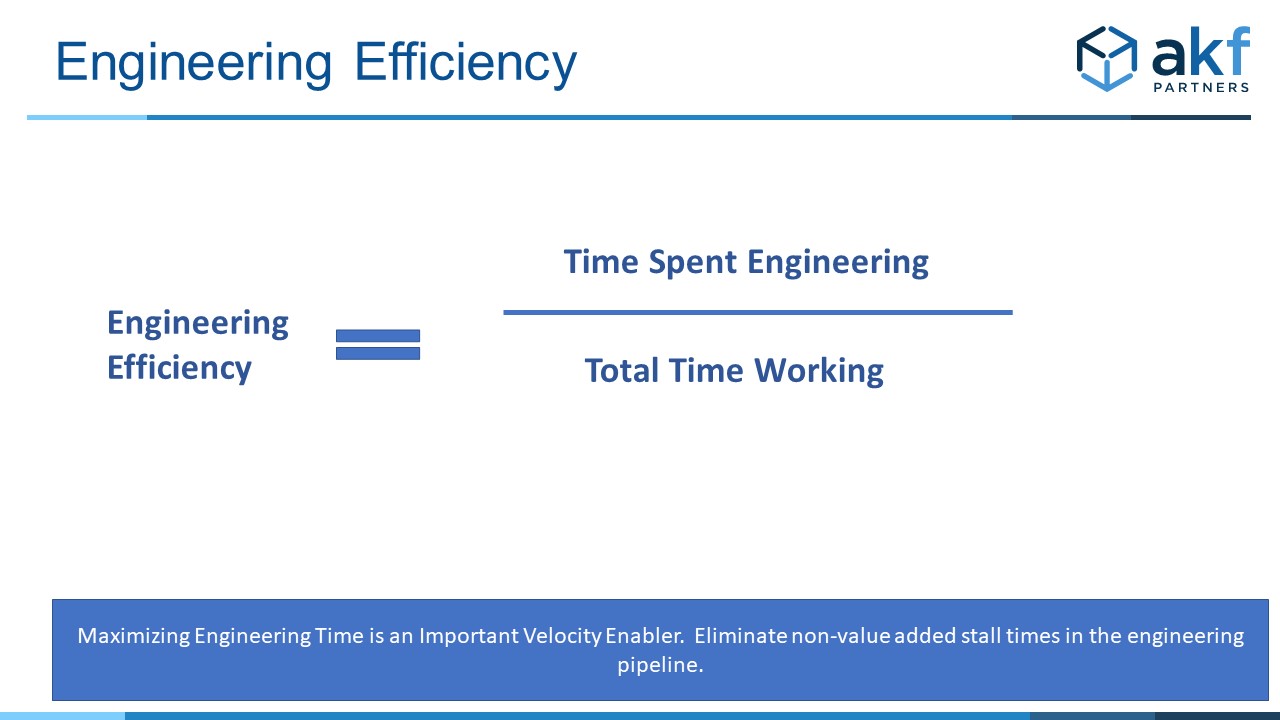

Borrowing from the practices of industrial engineering, we often perform time studies for our clients to find where engineers are spending their time. The idea is to focus on maximizing “engineering” time over “non-engineering” time. Consider the following equation:

Very often when we run these studies, we find that engineers spend significantly less than 50% of their time performing engineering tasks including:

- Designing

- Coding

- Building

- Unit Tests

- Defect Fixing

- Agile Ceremonies

The most common “stalls” or velocity decreasing events that steal value from the numerator include:

- Waiting for project owner responses

- Waiting for build environments

- Waiting for test environments

- Non-engineering specific meetings – or too many meetings

- Waiting for architectural reviews

- Waiting for upper management reviews

Failed or ineffective processes are commonly the causes of these stalls. For instance:

- Product owners not participating in standups and other ceremonies

- Centralized teams responsible for build, CI/CD, and test environment development over engineers being able to spin them up with infrastructure as code.

- Everyone being invited to meetings, or a culture that incents Agile Chicken behavior over Agile Pig behavior.

- Architecture review board processes that may “stall” deployments for review coordination (note – best to embed architects into the teams for real time reviews to eliminate stalls and coordination).

- Top heavy organizations that favor micromanagement over empowerment and accountability.

For more on team construction to enable higher levels of innovation and velocity, read our white paper.

AKF Partners has helped hundreds of customers increase their team velocity and decrease the cost of development. Give us a call, we can help.