The Scalability Cube – Your Guide to Evaluating Scalability

Perhaps the most common question we get at AKF Partners when performing technical due diligence on a company is, “Will this thing scale?” After all, investors want to see a return on their investment in a company, and a common way to achieve that is to grow the number of users on an application or platform. How do they ensure that the technology can support that growth? By evaluating scalability.

Let’s start by defining scalability from the technical perspective. The Wikipedia definition of “scalability” is the capability of a system, network, or process to handle a growing amount of work, or its potential to be enlarged to accommodate that growth. That definition is accurate when applied to common investment objectives. The question is, what are the key attributes of software that allow it to scale, along with the anti-patterns that prevent scaling? Or, in other words, what do we look for at AKF Partners when determining scalability?

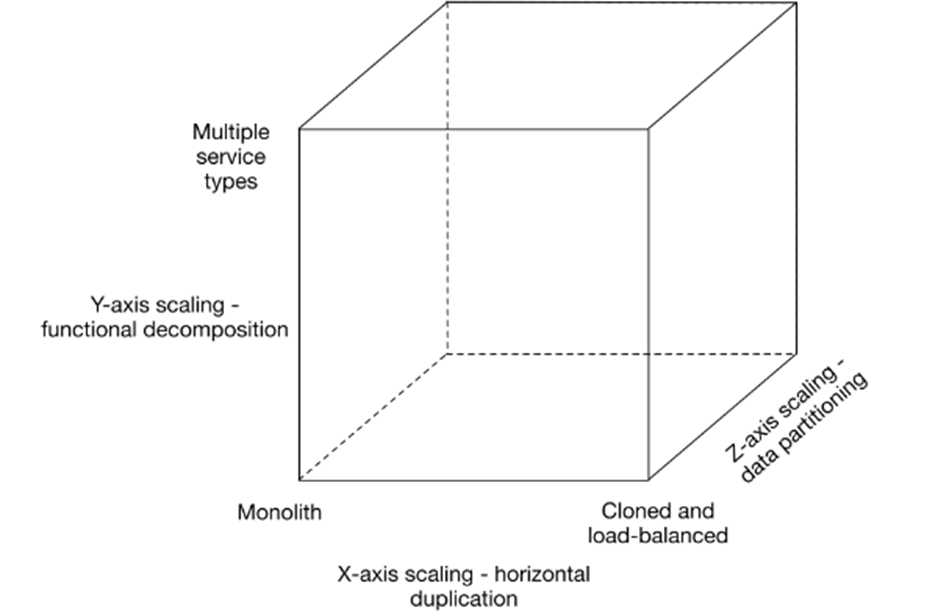

While an exhaustive list is beyond the scope of this blog post, we can quickly use the Scalability Cube and apply the analytical methodology that helps us quickly determine where the application will experience issues.

AKF Partners introduced the scalability cube, a scale design model for building resilience application architectures using patterns and practices that apply broadly to any application. This is a best practices model that describes all scale dimensions from “The Art of Scalability” book (AKF Partners – Abbot, Keeven & Fisher Partners).

The "Scale Cube" is composed of an X-Axis, Y-Axis, and Z-Axis:

1. Technical Architectural Layering (X-Axis ) – No single points of failure. Duplicate everything.

2. Functional Decomposition Segmentation – Componentization to Modules & Microservices (Y-Axis). Split Report, Message, Locate, Forms, Calendar into fault isolated swim lanes.

3. Horizontal Data Partitioning - Shards (Z-Axis). Beginning with pilot users, start with “podding” users for scalability and availability.

Figure 1

The Scale Cube helps teams keep critical dimensions of system scale in mind when solutions are designed. Scalability is all about the capability of a design to support ever growing client traffic without compromising performance. It is important to understand there are no “silver bullets” in designing scalable solutions.

An architecture is scalable if each layer in the multi-layered architecture is scalable. For example, a well-designed application should be able to scale seamlessly as demand increases and decreases and be resilient enough to withstand the loss of one or more computer resources.

Let’s start by looking at the typical monolithic application. A large system that must be deployed holistically is difficult to scale. In the case where your application was designed to be stateless, scale is possible by adding more machines, virtual or physical. However, adding instances requires powerful machines that are not cost-effective to scale. Additionally, you have the added risk of extensive regression testing because you cannot update small components on their own. Instead, we recommend a microservices-based architecture using containers (e.g. Docker) that allows for independent deployment of small pieces and the scale of individual services instead of one big application.

Monolithic applications have other negative effects, such as development complexity. What is “development complexity”? As more developers are added to the team, be aware of the effects suffering from Brooks’ Law. Brooks’ law states that adding more software developers to a late project makes the project even later. For example, one large solution loaded in the development environment can slow down a developer and gets worse as more developers add components. This causes slower and slower load times on development machines, and developers stomping on each other with changes (or creating complex merges) as they modify the same files.

Another example of development complexity issue is large outdated pieces of the architecture or database where one person is an expert. That person becomes a bottleneck to changes in a specific part of the system. As well, they are now a SPOF (single point of failure) if they are the only resource that understands the monolithic beast. The monolithic complexity and the rate of code change make it hard for any developer to know all the idiosyncrasies of the system, hence more defects are introduced. A decoupled system with small components helps prevents this problem.

When validating database design for appropriate scale, there are some key anti-patterns to check. For example:

• Do synchronous database accesses block other connections to the database when retrieving or writing data? This design can end up blocking queries and holding up the application.

• Are queries written efficiently? Large data footprints, with significant locking, can quickly slow database performance to a crawl.

• Is there a heavy report function in the application that relies on a single transactional database? Report generation can severely hamper the performance of critical user scenarios. Separating out read-only data from read-write data can positively improve scale.

• Can the data be partitioned across different load databases and/or database servers (sharding)? For example, Customers in different geographies may be partitioned to various servers more compatible with their locations. In turn, separating out the data allows for enhanced scale since requests can be split out.

• Is the right database technology being used for the problem? Storing BLOBs in a relational database has negative effects – instead, use the right technology for the job, such as a NoSQL document store. Forcing less structured data into a relational database can also lead to waste and performance issues, and here, a NoSQL solution may be more suitable.

We also look for mixed presentation and business logic. A software anti-pattern that can be prevalent in legacy code is not separating out the UI code from the underlying logic. This practice makes it impossible to scale individual layers of the application and takes away the capability to easily do A/B testing to validate different UI changes. Layer separation allows putting just enough hardware against each layer for more minimal resource usage and overall cost efficiency. The separation of the business logic from SPROCs (stored procedures) also improves the maintainability and scalability of the system.

Another key area we dig for is stateful application servers. Designing an application that stores state on an individual server is problematic for scalability. For example, if some business logic runs on one server and stores user session information (or other data) in a cache on only one server, all user requests must use that same server instead of a generic machine in a cluster. This prevents adding new machine instances that can field any request that a load balancer passes its way. Caching is a great practice for performance, but it cannot interfere with horizontal scale.

Finally, long-running jobs and/or synchronous dependencies are key areas for scalability issues. Actions on the system that trigger processing times of minutes or more can affect scalability (e.g. execution of a report that requires large amounts of data to generate). Continuing to add machines to the set doesn’t help the problem as the system can never keep up in the presence of many requests. Blocking operations exasperate the problem. Look for solutions that queue up long-running requests, execute them in the background, send events when they are complete (asynchronous communication) and do not tie up key application and database servers. Communication with dependent systems for long-running requests using synchronous methods also affects performance, scale, and reliability. Common solutions for intersystem communication and asynchronous messaging include RabbitMQ and Kafka.

Again, the list above is not exhaustive but outlines some key areas that AKF Partners look for when evaluating an architecture for scalability. If you’re looking for a checklist to help you perform your own diligence, feel free to use ours. If you’re wondering more about our diligence practice, you may be interested in our thoughts on best practices, or our beliefs around diligence and how to get it right. We’ve performed technical diligence for seed rounds, A-series and beyond, carve-outs, strategic investments and taking public companies private. From $5 million invested to over $1 billion. No matter the size of company or size of the investment, we can help.