Ever wonder what really makes a website tick? Or why some sites seem slower or faster than expected? Rest assured, there are straightforward answers. Today we are going to talk more about improving website performance.

This article extends our previous discussion on reducing DNS lookups. Here we will review the tradeoffs that affect website performance. When finished, you should have a better understanding of how to improve your own site.

This article is based on Rule 5 of Scalability Rules by Marty Abbott and Mike Fisher.

WHAT AFFECTS A WEBSITE’S PERFORMANCE?

Websites consist of many different objects (HTML, CSS, JavaScript, images, and so on). The responsiveness of a website depends largely on the number and size of the objects the site requires. Browsers download these objects to make the site usable and interesting. The time it takes to download them is the delay the user sees.

Reducing the size and number of objects will improve site performance and scalability. As discussed in Rule 4, reducing DNS lookups will also improve site performance.

If we focus only on trimming objects and reducing servers, we may not end up with something valuable to our users. Instead, we may end up with a website that reminds us of the early 1990s: no images, no animations, just text.

Fast? Yes.

Valuable? Not so much.

Speed competes with another requirement: a website must also provide valuable and useful content.

The balance to strike is to provide the content the user needs as simply and minimally as possible.

To see your own site's performance, visit GTmetrix.com. Enter a website of interest and, on the results page, look under the Page Details section for Total Page Size and Requests. A fast page usually comes in under 2 MB of total page size and under 40 requests.
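If you want to track those two numbers over time, a small check like the following can help. It is only a sketch: the thresholds are the rule of thumb above, and `PageReport` and `within_budget` are hypothetical names, not part of GTmetrix.

```python
from dataclasses import dataclass

# Rule-of-thumb thresholds from the text; tune them for your own site.
MAX_PAGE_BYTES = 2 * 1024 * 1024  # 2 MB
MAX_REQUESTS = 40


@dataclass
class PageReport:
    """Totals copied by hand from a performance report (hypothetical type)."""
    total_bytes: int
    requests: int


def within_budget(report: PageReport) -> bool:
    """Return True when the page is under both the size and request limits."""
    return report.total_bytes < MAX_PAGE_BYTES and report.requests < MAX_REQUESTS


if __name__ == "__main__":
    homepage = PageReport(total_bytes=1_500_000, requests=35)
    print(within_budget(homepage))
```

A check like this could run after each release so that a page that drifts over budget is noticed early.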

IMPROVING PERFORMANCE

When we talk about improving performance, page weight is an important element. Page weight is the total size, in bytes, of the page and the objects that make it up. Lean, small pages are almost always faster than heavy, rich pages.
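To make the idea of page weight concrete, here is a minimal sketch that adds up the objects on a page and groups them by type. The file names and sizes are invented for illustration; in practice they would come from your browser's network panel or a performance report.

```python
from collections import defaultdict

# Hypothetical objects for one page: (file name, type, size in bytes).
objects = [
    ("index.html", "html", 18_000),
    ("site.css", "css", 42_000),
    ("app.js", "js", 160_000),
    ("hero.jpg", "image", 820_000),
    ("logo.png", "image", 35_000),
]


def page_weight(objs):
    """Total bytes the browser must download for the page."""
    return sum(size for _, _, size in objs)


def weight_by_type(objs):
    """Bytes per object type, to show where the weight comes from."""
    totals = defaultdict(int)
    for _, kind, size in objs:
        totals[kind] += size
    return dict(totals)


if __name__ == "__main__":
    print(page_weight(objects))
    print(weight_by_type(objects))
```

In this made-up page, images account for most of the bytes, which is typical and is why images get their own section below.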

Even though bandwidth has gone up over time and more content could be delivered, it is wise to make a page as light as possible. The crispness and responsiveness of a website will provide a better experience and help retain users.

Here are some ways to reduce page weight and increase performance.

Simultaneous downloads

One way to improve site performance is with simultaneous downloads. In contrast to one-at-a-time downloads, browsers have the ability to download objects simultaneously.

The original HTTP/1.1 specification (RFC 2616) states that clients should not maintain more than two connections with any server. Going beyond that recommended limit, most modern browsers allow at least six simultaneous downloads per host.

If objects can be split into smaller objects and served in parallel, the site will have better performance.
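A back-of-the-envelope model shows why parallel connections matter. The sketch below assumes every object takes about the same time to download and ignores latency, object size, and protocol details, so treat the result as a rough intuition rather than a measurement. The function name `download_rounds` is our own.

```python
import math


def download_rounds(object_count: int, parallel_connections: int = 6) -> int:
    """Rough number of download 'rounds' when objects are fetched in parallel.

    Simplifying assumption: all objects take equally long to download.
    """
    if parallel_connections < 1:
        raise ValueError("parallel_connections must be at least 1")
    return math.ceil(object_count / parallel_connections)


if __name__ == "__main__":
    # 40 objects over six connections versus two connections.
    print(download_rounds(40, 6))  # 7
    print(download_rounds(40, 2))  # 20
```

Under this simple model, the same 40 objects need roughly a third as many rounds with six connections as with two.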

IMAGES

Images are often the largest objects on a site. They are usually orders of magnitude larger than code or text files. From a speed perspective, we’d like to avoid images. From a user engagement perspective, we know we need them.

So, what do we do?

Lower Resolution

Lower resolution images are smaller in size. When an image is too big, two things happen: the browser takes longer to download the image, and it must do extra work to scale the image down to the size the CSS asks for. Both delay the site. By comparison, if we make the image the right size to begin with, the browser has less work to do, the image is smaller to download, and the image still looks good.

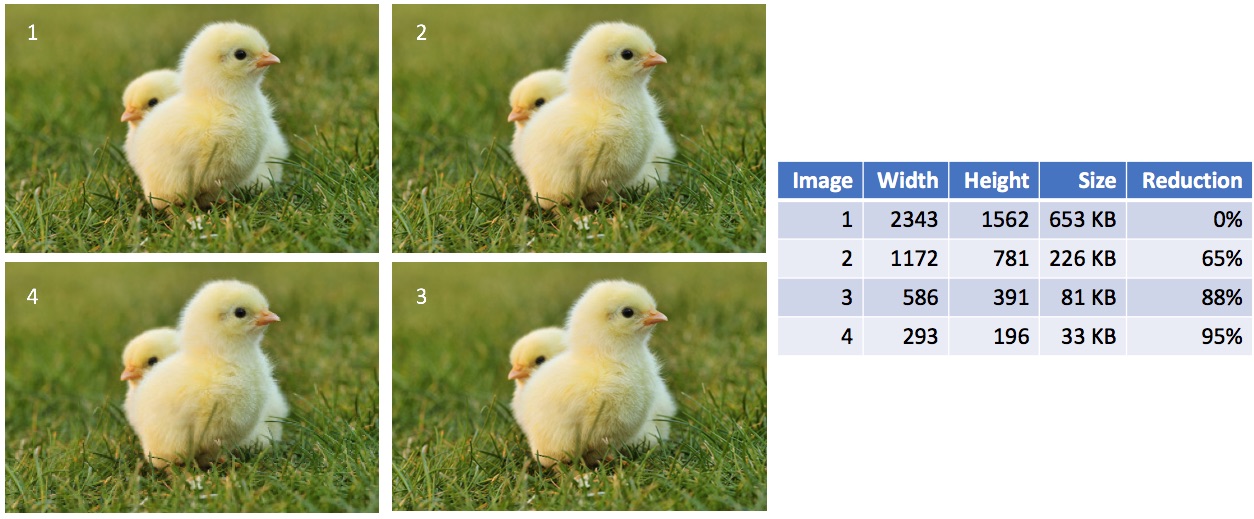

As an example, take a look at this set of images.

The image in the top left is the original size. Moving clockwise, each image has 50% lower resolution than the previous one. Image 4 is 95% smaller than image 1; can you see a difference?

If an image is large and prominent on a page, we want a higher resolution. If the image is in a smaller, less prominent location, we can get by with a smaller image. Check your site's images to make sure each one uses an appropriate resolution. You can get a good report on inefficient image sizes from GTmetrix.
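One rough way to judge whether an image is oversized is to compare the pixels delivered with the pixels actually displayed. The sketch below is plain arithmetic; `oversize_factor` is a hypothetical helper and the dimensions are made up.

```python
def oversize_factor(natural: tuple[int, int], displayed: tuple[int, int]) -> float:
    """How many times more pixels are downloaded than are shown on screen."""
    natural_w, natural_h = natural
    displayed_w, displayed_h = displayed
    if displayed_w <= 0 or displayed_h <= 0:
        raise ValueError("displayed dimensions must be positive")
    return (natural_w * natural_h) / (displayed_w * displayed_h)


if __name__ == "__main__":
    # A 1600x1200 file shown in a 400x300 slot carries 16x the pixels needed.
    print(oversize_factor((1600, 1200), (400, 300)))  # 16.0
```

A factor near 1 means the file matches its slot. High-density screens may justify a factor somewhat above 1, so use the number as a prompt to look closer rather than a hard rule.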

Image sprites

Another approach to reducing the number of image objects is to use CSS image sprites. This approach combines multiple images into a single object for download. This means one request might return one picture that contains 10 smaller pictures. Each individual picture is then displayed with a few lines of CSS that set the combined image as a background and shift its position so only the wanted picture shows.
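To illustrate the CSS involved, here is a small sketch that generates sprite rules for icons laid out left to right in a single sheet. The class names, the sheet URL, and the equal-sized square icons are all assumptions made for the example.

```python
def sprite_css(sheet_url: str, icon_size: int, names: list[str]) -> str:
    """Build CSS rules for square icons placed side by side in one sprite sheet."""
    rules = [
        f".icon {{ background-image: url('{sheet_url}'); "
        f"width: {icon_size}px; height: {icon_size}px; display: inline-block; }}"
    ]
    for index, name in enumerate(names):
        # Shift the sheet left so the icon at this index lines up with the box.
        offset = -index * icon_size
        rules.append(f".icon-{name} {{ background-position: {offset}px 0; }}")
    return "\n".join(rules)


if __name__ == "__main__":
    print(sprite_css("icons.png", 32, ["home", "search", "cart"]))
```

An element with the classes `icon icon-search` would then show only the second 32-pixel tile of `icons.png`, even though the browser downloaded the whole sheet in one request.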

Image sprites are good in that they can reduce the number of HTTP requests needed to render the site. However, we don’t want to take this too far and combine too many images. With too many images combined, no image can be rendered until the whole sprite has downloaded. In contrast, simultaneous connections allow a browser to render each image as soon as it arrives.

Inline .svg images

Another approach to reduce the delay of downloading images is to use inline .svg images. These images can be embedded within the HTML and are rendered by most browsers. Because they are embedded inline, there is no separate object to request.

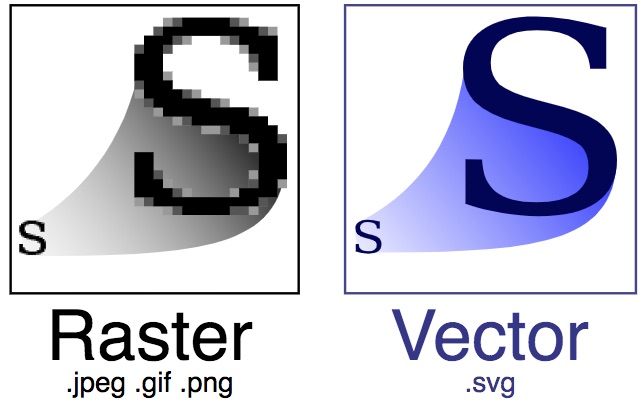

SVG stands for Scalable Vector Graphics. It is a format for 2D graphics that uses vectors and shapes instead of a bitmap image. Bitmap images (like .jpg, .png, and .gif files) use a fixed set of pixels. As you zoom in to the picture of a bitmap, you start to see the pixels. In contrast, a benefit of .svg images is that they are based on shapes so they maintain high quality resolution despite the zoom level. The next picture [1] illustrates the difference between Bitmap and Vector images.

There are sites such as simpleicons.org that offer icons in .svg format for many brands. These images can be minified with a tool such as SVGOMG, which strips extra information out of the .svg file, making it as lean as possible. The final result is markup that can be added directly to a website page.
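As a rough picture of what "stripping extra information" means, the sketch below removes the XML declaration, comments, and whitespace between tags so the SVG can be pasted inline. It is a deliberately naive stand-in, not what SVGOMG does internally, and `inline_svg` is a hypothetical helper.

```python
import re


def inline_svg(svg_source: str) -> str:
    """Naively prepare SVG markup for embedding directly in an HTML page."""
    # Remove the XML declaration, which is not needed inside HTML.
    cleaned = re.sub(r"<\?xml.*?\?>", "", svg_source, flags=re.DOTALL)
    # Remove comments left behind by drawing tools.
    cleaned = re.sub(r"<!--.*?-->", "", cleaned, flags=re.DOTALL)
    # Remove whitespace between tags.
    return re.sub(r">\s+<", "><", cleaned).strip()


if __name__ == "__main__":
    svg_file = (
        '<?xml version="1.0"?>\n'
        "<!-- logo -->\n"
        '<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 10 10">\n'
        '  <circle cx="5" cy="5" r="4"/>\n'
        "</svg>\n"
    )
    # The logo now travels inside the page, with no extra request.
    print(f'<a href="/">{inline_svg(svg_file)}</a>')
```

A dedicated optimizer goes much further (shortening paths, dropping editor metadata), so for real assets a purpose-built tool is the better choice.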

We often use this approach for logos, social media icons or common small images.

Lazy loading

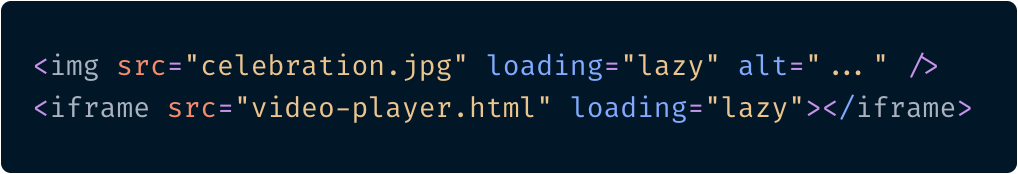

Another way to reduce the impact of images is to use lazy loading. This approach waits to load an image until it is about to become visible within the browser viewport. It is simple to implement, as most browsers have the option built in through the loading attribute on image tags (loading="lazy").
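For a site that builds its HTML in code, the attribute can be added in one place. The helper below is a sketch with a hypothetical name, `lazy_img`. It also sets width and height, an addition of ours, so the browser can reserve space before the image arrives.

```python
from html import escape


def lazy_img(src: str, alt: str, width: int, height: int) -> str:
    """Render an <img> tag that asks the browser to defer loading."""
    return (
        f'<img src="{escape(src, quote=True)}" alt="{escape(alt, quote=True)}" '
        f'width="{width}" height="{height}" loading="lazy">'
    )


if __name__ == "__main__":
    print(lazy_img("/images/team.jpg", "Our team", 800, 450))
```

Images that are visible as soon as the page opens are usually better left without the attribute, since deferring them only delays what the user sees first.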

Combine and Minify

Two other object classes that can be decreased in size are JavaScript and CSS files. For these file types, we want to combine and “minify” them.

CSS files often contain third-party libraries of which only a part is in use. Software like PurgeCSS can remove the unused CSS, even from third-party libraries.

A common tool for combining and minifying JavaScript and CSS files is Gulp. Gulp is a general-purpose toolkit for automating time-consuming development tasks. There are example scripts you can use that will automatically combine and minify your JavaScript and CSS files. Again, you do not want to go too far and combine all files into one.
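To show what combining and minifying amounts to, here is a deliberately naive sketch for CSS. It is not how Gulp or its plugins work. It simply joins the sources, strips comments, and squeezes out whitespace, and it would mangle edge cases such as strings that contain braces or selectors that rely on a space before a colon. Use a real minifier in production.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: drops comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # strip comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # trim around punctuation
    css = css.replace(";}", "}")                          # drop last semicolon in a block
    return css.strip()


def combine_and_minify(sources: list[str]) -> str:
    """Concatenate several stylesheets in order, then minify the result."""
    return minify_css("\n".join(sources))


if __name__ == "__main__":
    base = "/* layout */\nbody {\n  margin: 0;\n  padding: 0;\n}\n"
    theme = "h1, h2 {\n  color: #333;\n}\n"
    print(combine_and_minify([base, theme]))
    # body{margin:0;padding:0}h1,h2{color:#333}
```

Two requests become one, and the single file is smaller than either the comments or the indentation would suggest.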

KEY TAKEAWAYS

There is not a “one-size-fits-all” answer about the ideal size of objects or how many subdomains you should consider. Nor is there a single answer for “the best way” to combine or minify your objects. These depend on your service, what you are offering, and what you need to convey on the website. The course of action is to continually test the site and look for ways to improve.

In general, these tips will help increase the speed of a site:

- Fewer and smaller objects

- Fewer domains

- Leverage simultaneous downloads

- Reduce, combine, or embed images

- Combine and minify JavaScript and CSS

This article has focused mostly on how the experience in the browser could be improved. At AKF Partners, we help improve the overall product. If you would like help improving or scaling your online experience, we can help. Give us a call.

Sources

- [1] By Yug, modifications by 3247 - Unknown source, CC BY-SA 2.5, https://commons.wikimedia.org/w/index.php?curid=1183592