AI, ML … what does all this mean?

Most business leaders I talk with are mystified by the gobbledygook of acronyms and phrases they encounter in this space: AI, ML, data science, big data, deep learning, data mining, analytics, narrow AI, artificial general intelligence (AGI). The list goes on and on.

In this post, I’ll define these terms and put some structure around how they relate to one another.

Artificial Intelligence History and Goals

Artificial Intelligence is a catch-all term that encompasses machines or other non-biological entities that can learn and/or solve tasks. The term was coined for the 1956 Dartmouth summer research project, whose proposal described the goal as finding “… how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves”. The field was inspired by Alan Turing’s 1950 paper Computing Machinery and Intelligence, in which he proposed his famous ‘Turing test’. The test is an imitation game in which a human evaluator holds text-based conversations with another human and a computer, each in a separate room. If the evaluator can’t tell the human from the machine, the computer is deemed to have passed the test.

One of the most compelling questions for Artificial Intelligence overall (and one on which there is not yet consensus) is whether intelligence needs to be “human-like” to qualify as intelligence. The question has no bearing on large swaths of the AI landscape. For instance, machine learning need not mirror human learning, just as most mammals can learn without learning the way humans do. The Turing Test, however, implicitly requires human-like imitation, and therefore an approximation of human interaction and human “intelligence”, whereas other proposed tests recognize intelligence without requiring it to resemble a human’s. Henry Ford didn’t need to understand how horses worked to build something better than them. That’s good news, because today we do not understand the brain well enough to emulate it. Choosing human-like intelligence as a goal could both impede progress and result in the AI equivalent of another horse rather than the automobile.

Understanding an initiative’s goals helps explain what it hopes to accomplish. Because science and engineering/application often have different goals, we’ve summarized both here:

Scientific Goal of AI: Understand and model existing intelligent systems in order to deepen our understanding of, and ultimately change, ourselves and the world.

Engineering/Application Goal of AI: Apply the existing body of knowledge created by multi-disciplinary study to design new intelligent systems to solve problems.

Let’s start with a diagram that gives a framework to think about the various terms. You should keep this reference diagram handy as you read through the rest of this blog post.

Think of AI as a broad collection of disciplines and approaches, each of which forms a subfield. The term “AI” is then used in two ways:

- A broad term that ignores the subfields and refers to human-created (or, one day, machine-created) intelligence within systems. This is how non-technical people most commonly use “AI”, and in this context the most common test of progress is the Turing Test. But remember: this test assumes that we are trying to achieve “human-like” rather than “alien” intelligence.

- A growing collection of disciplines that collectively define “AI”. This is the correct “technical use” of the term. The most common subfields of AI that you will hear about, and that we discuss in this post, are Machine Learning, Expert Systems, Natural Language Processing, Neural Networks, Computer Vision, and Deep Learning.

Subfields and Disciplines within AI:

Although these are not fields in their own right, it’s important to first discuss two different approaches within AI: Narrow AI vs. General AI. Narrow AI is a machine or algorithm that can solve a specific problem in a limited context, e.g., translating text from one language to another. General AI, on the other hand, refers to algorithms that can solve a variety of problems in different contexts. The AI algorithms in use today are generally the narrow kind, performing a specific task such as translation, recommendation, or object recognition.

Expert Systems or Symbolic AI: AI research has taken different paths to the problem. The first approach was symbolic AI, which represented knowledge in a human-readable form and encoded logical relationships or “rules of thumb” that could be searched for the optimal answer. A classic example is a semantic network, in which knowledge is represented as relationships in a network, as illustrated below. It is obvious from the diagram that mapping these relationships takes a lot of effort, and they are sometimes contradictory, e.g., “birds can fly” conflicts with the fact that some birds, such as penguins, cannot.
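To make the idea concrete, here is a minimal Python sketch of a semantic network, assuming a simple “is a” hierarchy with properties inherited from parent concepts. The concepts, properties, and the `lookup` helper are hypothetical illustrations, not taken from any particular system. A hand-written override is one way such systems handle the “birds fly, but penguins don’t” conflict, and it shows why every exception has to be mapped by hand.

```python
# Hypothetical toy semantic network: each concept has an "is_a" parent and local properties.
NETWORK = {
    "animal":  {"is_a": None,     "props": {"alive": True}},
    "bird":    {"is_a": "animal", "props": {"can_fly": True, "has_feathers": True}},
    "canary":  {"is_a": "bird",   "props": {"color": "yellow"}},
    "penguin": {"is_a": "bird",   "props": {"can_fly": False}},  # hand-coded exception
}

def lookup(concept, prop):
    """Walk up the is_a links until some concept defines `prop`; return None if none does."""
    node = concept
    while node is not None:
        props = NETWORK[node]["props"]
        if prop in props:
            return props[prop]  # the most specific definition wins
        node = NETWORK[node]["is_a"]
    return None

print(lookup("canary", "can_fly"))   # True  (inherited from bird)
print(lookup("penguin", "can_fly"))  # False (local override wins)
print(lookup("penguin", "alive"))    # True  (inherited from animal)
```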

Another approach of this kind is the expert system, in which knowledge of a domain is captured as rules documented by experts in that area. While this approach works for narrow domains, expert systems are difficult to set up and do not scale flexibly to new domains.
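A minimal Python sketch of how an expert system might apply such rules, assuming forward chaining (one common inference strategy: keep firing rules until no new facts appear). The loan-approval rules and fact names are hypothetical. Note that every rule has to be written by hand, which is exactly what makes these systems hard to extend to new domains.

```python
# Hypothetical rules an "expert" might document: (facts that must all hold, concluded fact).
RULES = [
    ({"income_verified", "low_debt"}, "creditworthy"),
    ({"creditworthy", "collateral"}, "approve_loan"),
    ({"missed_payments"}, "manual_review"),
]

def forward_chain(facts):
    """Fire every rule whose conditions are known, repeating until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

result = forward_chain({"income_verified", "low_debt", "collateral"})
print(sorted(result))
# ['approve_loan', 'collateral', 'creditworthy', 'income_verified', 'low_debt']
```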

An alternative approach under the umbrella of Artificial Intelligence was to create techniques that “learned from raw data” and built models without being explicitly programmed, which leads us to…

Machine Learning (ML)

Machine Learning includes a set of algorithms that can ingest raw data and automatically fit models to the data. These algorithms statistically ‘learn’ from the patterns in the data to provide ‘intelligent’ outputs.

There are three main kinds of ML: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning learns from historical examples of inputs paired with their known outputs (labels), so that it can predict the output for new inputs. This is the type of problem most commonly encountered in the business world. This approach is illustrated below.
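As a minimal sketch of supervised learning, assume a single input feature and a straight-line model fitted by ordinary least squares. The ad-spend and sales figures are hypothetical, made up for illustration; real business models usually use many more features and examples, but the pattern of “learn from labeled history, then predict” is the same.

```python
# Hypothetical historical data: monthly ad spend (inputs) and the sales that followed (labels).
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]

def fit_line(xs, ys):
    """Ordinary least squares for the model y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line(ad_spend, sales)
# Use the fitted model to predict the label for an input it has never seen.
print(f"predicted sales at spend 6.0: {slope * 6.0 + intercept:.2f}")  # 12.91
```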

Unsupervised learning aims to extract patterns, such as natural groupings, from data that has no labels, and is often referred to as ‘knowledge discovery’.
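Clustering is a typical unsupervised task. The sketch below uses k-means, one common clustering algorithm, on hypothetical customer data; no labels are provided, and the algorithm discovers the two groups on its own. Seeding the centroids with the first k points is a simplification for a deterministic example; practical implementations use smarter or randomized initialization.

```python
import math

# Hypothetical unlabeled customer data: (annual spend in $k, store visits per month).
points = [(1.0, 1.0), (9.0, 9.0), (1.5, 2.0), (8.0, 8.5), (2.0, 1.5), (9.5, 8.0)]

def k_means(data, k, iterations=10):
    """Plain k-means: seed centroids with the first k points, then alternate assign/update."""
    centroids = list(data[:k])
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in data:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members (keep it if empty).
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids, clusters

centroids, clusters = k_means(points, k=2)
print(centroids)  # one low-spend segment and one high-spend segment
```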

Reinforcement learning learns by trial and error: the model’s actions are evaluated with a reward function whose feedback is fed back into the model over many iterations. A typical business would not use these models; they are used mainly in specialized applications like robotics and autonomous vehicles. A famous example is ‘AlphaGo’, a reinforcement learning model that improved largely by playing against itself and went on to defeat the world’s top professional Go players.
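A minimal sketch of the trial-and-error loop, using tabular Q-learning, one classic reinforcement learning algorithm (AlphaGo itself combined deep neural networks with tree search, which is far more involved). The environment is a hypothetical five-cell corridor where reaching the last cell earns a reward of 1; the learning rate, discount factor, and exploration rate are illustrative choices.

```python
import random

N_STATES = 5                  # cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)            # move left or move right
GAMMA, ALPHA, EPSILON = 0.9, 0.5, 0.3

def step(state, action):
    """Hypothetical environment: walls at both ends; reward 1.0 only on reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward

def choose(q, state, rng):
    """Epsilon-greedy: usually exploit the best-known action, sometimes explore at random."""
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])  # random tie-break

def train(episodes=200, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = choose(q, state, rng)
            nxt, reward = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            future = 0.0 if nxt == N_STATES - 1 else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (reward + GAMMA * future - q[(state, action)])
            state = nxt
    return q

q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # the learned policy moves right (+1) in every non-goal cell
```

Nobody tells the agent to “go right”; it discovers that behavior purely from the reward signal.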

Artificial neural networks are typically a subset of Machine Learning models (though some exceptions exist) inspired by the neuronal connections of the biological brain. They aren’t a new idea: the first modern neural network models date back to the 1950s. What has changed recently is the availability of large amounts of data on the internet and of parallel computing capabilities that allow us to train such models with billions of parameters.
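One of those 1950s models is the perceptron, introduced by Frank Rosenblatt in 1958: a single artificial “neuron” that fires when a weighted sum of its inputs crosses a threshold. The sketch below trains one to compute logical AND using the classic perceptron update rule; the learning rate of 1 and the 10 training passes are illustrative choices.

```python
# Training examples for logical AND: (inputs, desired output).
samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, bias, x):
    """Fire (output 1) if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0

def train_perceptron(data, epochs=10, lr=1):
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)
            # Perceptron rule: after a mistake, shift weights toward the correct answer.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

weights, bias = train_perceptron(samples)
print([predict(weights, bias, x) for x, _ in samples])  # [0, 0, 0, 1]
```

The weights and bias are the model’s parameters; modern networks have billions of them rather than three.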

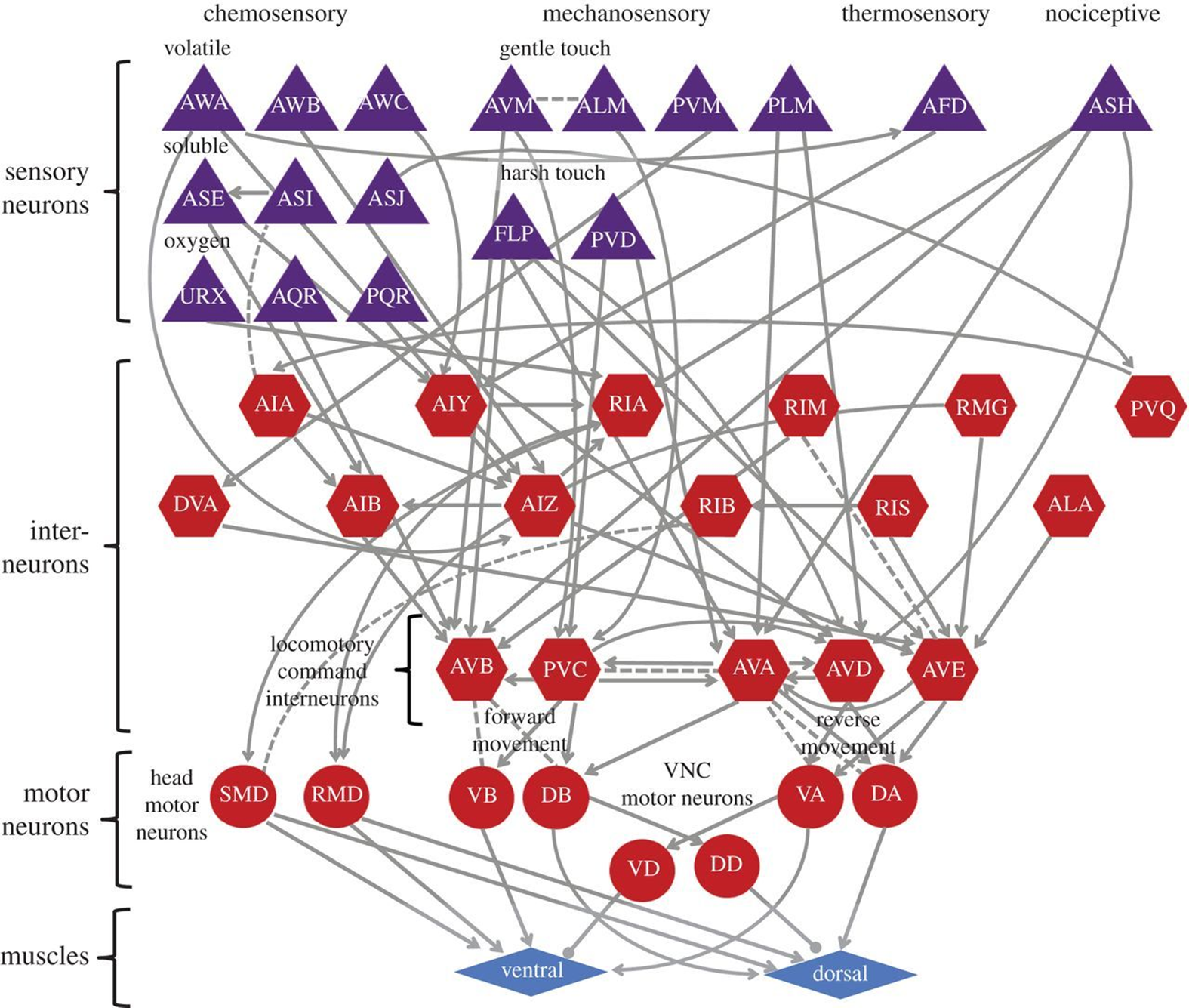

Let’s first look at what inspired these networks. In biology, neurons are connected to one another in parallel, are either on or off like a pseudo-digital signal, and build on each other’s signals in layers. The number of these parallel connections grows rapidly, which makes such networks quite challenging to study. In fact, one of the most studied biological neural networks is the simple nervous system of a worm (C. elegans), with 302 neurons (vs. ~86 billion neurons in humans). The image below shows the structure of its neural connections, sourced from the linked paper. You can see how the sensory neurons feed information forward through intermediate layers of neurons via electro-chemical signals that are finally translated into muscle action through motor neurons. Please make note of the word ‘layers’, since we are going to see it again in the context of artificial neural networks.

The image below represents an artificial neural network. It superficially resembles a biological neural network, and its neural values are encoded as matrices of numbers. The number of layers in an artificial neural network indicates how deep it is, hence the term deep neural network. The latest neural networks are very deep: GPT-3, the model family from which the famous ChatGPT chatbot was developed, has 96 layers. That’s a very deep neural network indeed! Truly an amazing engineering accomplishment! More about Large Language Models and GPT below.
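To show what “layers” and “matrices of numbers” mean mechanically, here is a minimal Python sketch of a forward pass through a tiny three-layer network. The weights are hypothetical values chosen by hand; in a real network they are learned during training, and large models stack far wider layers many more times.

```python
# Each layer is a weight matrix plus a bias vector; "depth" is how many layers are stacked.
def relu(v):
    """A common nonlinearity: pass positive values through, clip negatives to zero."""
    return [max(0.0, x) for x in v]

def dense(matrix, bias, v):
    """One layer: multiply the input vector by a weight matrix, then add a bias."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(matrix, bias)]

# Hypothetical weights: 2 inputs -> 3 hidden units -> 2 hidden units -> 1 output.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, 0.0]),
    ([[1.0, 0.5, 0.2], [-0.5, 1.0, 0.3]], [0.0, 0.0]),
    ([[0.7, -0.6]], [0.05]),
]

def forward(x):
    for i, (matrix, bias) in enumerate(layers):
        x = dense(matrix, bias, x)
        if i < len(layers) - 1:  # nonlinearity between layers, none on the output
            x = relu(x)
    return x

print(f"depth = {len(layers)} layers, output = {forward([1.0, 2.0])}")
```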

Natural Language Processing (NLP) is a subfield of AI focused on understanding text and spoken words the way a human would. It helps power text-to-speech, speech-to-text, and the semantic evaluation behind many advertising networks and search engines. Without it, commerce sites would be far less able to suggest similar products while you shop, and search engines would return little beyond near-exact text matches.
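A minimal sketch of how semantic matching can go beyond exact text matches, assuming words are represented as vectors (“embeddings”) and phrases are compared by cosine similarity. The three-dimensional vectors below are hypothetical and hand-made; real systems learn vectors with hundreds of dimensions from large text corpora.

```python
import math

# Hypothetical hand-made word vectors, for illustration only.
EMBEDDINGS = {
    "sneakers": [0.9, 0.1, 0.0],
    "running":  [0.8, 0.3, 0.1],
    "shoes":    [0.85, 0.05, 0.1],
    "coffee":   [0.0, 0.1, 0.95],
    "maker":    [0.1, 0.2, 0.8],
}

def embed(text):
    """Represent a phrase as the average of its word vectors."""
    vectors = [EMBEDDINGS[w] for w in text.lower().split()]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def cosine(a, b):
    """Cosine similarity: near 1.0 for vectors pointing the same way, near 0 for unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

query = embed("sneakers")
for product in ["running shoes", "coffee maker"]:
    print(product, round(cosine(query, embed(product)), 3))
```

“sneakers” shares no words with “running shoes”, yet it scores far closer to it than to “coffee maker”.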

Computer Vision: If natural language processing is the “ears” of AI, then vision is the “eyes”. Computer vision focuses on extracting meaning from images. It allows your phone to unlock when it recognizes your face, and it can classify the objects in an image so that other programs can respond to them.
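A basic building block of computer vision is sliding a small filter (kernel) across an image to detect features such as edges. The sketch below applies a Sobel-style vertical-edge kernel to a tiny hypothetical grayscale image. Strictly speaking it computes cross-correlation, which is what convolutional neural networks compute under the name “convolution”; in a CNN the kernel values are learned rather than hand-picked.

```python
# A tiny hypothetical grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Sobel-style vertical-edge kernel: responds where brightness changes from left to right.
KERNEL = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

def convolve(img, kernel):
    """Slide the kernel over every full window ("valid" mode, no padding)."""
    k = len(kernel)
    rows, cols = len(img) - k + 1, len(img[0]) - k + 1
    return [
        [
            sum(kernel[i][j] * img[r + i][c + j] for i in range(k) for j in range(k))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

for row in convolve(image, KERNEL):
    print(row)  # [0, 36, 36, 0]: strong response only where dark meets bright
```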

Data science is a catch-all term for a field that uses mathematical, statistical, and scientific computing methods to derive insights (signal) from noisy data.

Are we turning the corner on General AI?

One of the questions I encounter all the time is: “I have been hearing for years that we are going to have general-purpose robots and intelligent algorithms. Where is my personal R2-D2?”

I have been in the trenches trying to deploy robotics and AI in practice, and while we have had some major successes, application at scale, especially in the physical world, has been limited. This is primarily because each algorithm has been narrow in scope (Narrow AI), e.g., recognizing an object with machine vision or converting speech to written text.

New neural network model ‘transforming’ the landscape

There has been considerable excitement recently about Large Language Models (LLMs) based on a neural network architecture called the ‘Transformer’, introduced in 2017. Until recently, language tasks were tackled with specialized Natural Language Processing (NLP) models that required considerable programming work.
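The core operation inside a Transformer is self-attention: every token computes how relevant every other token is to it and builds a weighted mix of their vectors. Below is a minimal sketch of scaled dot-product self-attention in plain Python. As a simplification, it uses the input vectors directly as queries, keys, and values; real Transformers first multiply them by learned matrices and run many attention “heads” in parallel. The token vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x (simplified)."""
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)  # how strongly this token attends to each token
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out

# Hypothetical 2-dimensional vectors for a three-token input.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(v, 3) for v in row])
```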

An example of a model based on the Transformer framework is GPT-3 (Generative Pre-trained Transformer), which has billions of connected variables (referred to as parameters, which quantify the size and complexity of the model): 175 billion of them. Since then, the scale of these models has increased further, to more than a trillion parameters in Google’s Switch Transformer. Counterbalancing the techno-excitement about this breakthrough modeling approach are questions of bias, in particular whether such models institutionalize historical discrimination. We will talk more about bias and explainability in a future post.
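Where do parameter counts like these come from? A fully connected layer has one weight per input-output pair plus one bias per output. For Transformers, a common rule of thumb is about 12 × d² parameters per layer (roughly 4d² for attention and 8d² for the feed-forward block), where d is the model width. The sketch below applies that approximation, which ignores embeddings, biases, and normalization layers, to GPT-3’s published configuration of 96 layers and a width of 12,288.

```python
def dense_params(n_in, n_out):
    """A fully connected layer: one weight per input-output pair plus one bias per output."""
    return n_in * n_out + n_out

def approx_transformer_params(n_layers, d_model):
    """Rule of thumb: ~4*d^2 (attention) + ~8*d^2 (feed-forward) = ~12*d^2 per layer.
    Ignores token embeddings, biases, and layer norms."""
    return 12 * n_layers * d_model ** 2

print(dense_params(784, 128))  # 100480 parameters in one small layer
gpt3 = approx_transformer_params(n_layers=96, d_model=12288)
print(f"GPT-3 estimate: {gpt3 / 1e9:.0f} billion parameters")  # 174 billion
```

The estimate lands close to the reported 175 billion; the gap is mostly the embedding tables the rule of thumb leaves out.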

What has been interesting is the surprising emergence of ‘perceived intelligence’ once models pass a certain size, even if there are questions about whether a model really understands the concepts it puts together through statistical patterns. In fact, a Google engineer’s perception of a chatbot model as “having a soul” caused a furor and, unfortunately for him, got him fired. It is quite amazing that a model built on large-scale, human-generated word patterns can create a feeling of sentience! In a follow-up post, we will delve deeper into what ‘intelligence’ means and what type of intelligence these models demonstrate. Stay tuned for that!

The same neural network framework has also been applied to image classification and image generation. Researchers then bridged the gap between text and images with text-to-image algorithms (DALL-E, Imagen), which have been in the news recently and can generate photo-realistic images from word cues (the buzzword for this family of models is generative AI). We talked about NLP and computer vision as two separate subfields, but now the Transformer framework can address both of them! We now have to ask: have we cracked the code on general AI?

One general AI model to rule them all?

A recent development that flew under the radar is, I believe, more relevant to the widespread application of AI in the real world. DeepMind released a generalist agent called Gato that uses the same underlying neural network to perform tasks as disparate as manipulating objects with a robot arm, captioning images, playing Atari games, and holding interactive conversations. While this algorithm is still at an early stage of development, it is very promising that a single model can be effective across multiple virtual and physical domains. Given historical trends, it will also be interesting to see what happens as this generalized multi-task model is scaled up.

While there are still quite a few research and engineering challenges to be resolved to get it to a productized level, this could be a turning point in applying a single neural network model in a way that spans the virtual and physical world.

What does that mean for you as a business leader?

It is going to become easier to automate repetitive tasks, both virtual and physical. Since the internet contains a lot of ‘trainable data’, business applications are going to start with virtual tasks. The first applications are likely to be in customer service, marketing content generation, and custom image creation. As automated coding tools like GitHub Copilot continue to improve, the need for coding resources will decline.

Translating this to the physical world is going to need a bit more work in robotics applications. Unlocking such a generalist framework could accelerate the ability to apply AI in the physical domain in real-world situations and address some of the issues with labor shortages being felt in the world today.

Is this going to be available tomorrow to automate physical tasks like warehouse picking? Probably not, but in three to five years we will likely see an explosion of automation in real-world scenarios. As a business leader, you should keep an eye on developments in this space, and one easy way to do that is by subscribing to the AKF Partners blog newsletter.

In subsequent posts in this series, we will outline the CEO’s guide to data strategy: three things you need to know to get business wins with data and ML. Then we will discuss a framework for rapidly deploying and supporting ML models, including our brand new AKF ML Cube framework.

If you need help implementing any of the above strategies, contact us. AKF helps companies at various stages of growth, and we would love to be of assistance to yours.